Artificial intelligence (AI) promises tremendous value: analysts forecast up to $15.7 trillion of added global GDP by 2030. However, realizing this potential requires trusting AI's recommendations, which grows harder as AI systems increase in complexity. Explainable AI (XAI) is key to enterprise AI success because it makes opaque models understandable.

In my decade as a data analytics consultant, lack of model interpretability has been a consistent barrier to AI adoption. Whether for fraud detection or demand forecasting, clients struggle to act on "black box" systems. Explainable AI finally makes complex models transparent so humans can confidently oversee, audit, and improve them.

This guide will cover:

- The rising complexity making AI explainability urgent

- Goals and techniques of explainable AI systems

- Why enterprises need XAI for mission-critical AI now

- A framework for implementing XAI

- The future of explainable AI

Let's explore how enterprises can leverage XAI to ensure AI trustworthiness, accountability, and performance.

The crux of the black box problem

AI achieved remarkable feats in 2024, from chatbots like ChatGPT to AlphaFold's advances in protein science. However, these gains come with a tradeoff: opacity. The best-performing AI models are increasingly complex black boxes.

Take natural language processing (NLP). Simple bag-of-words models have given way to deep neural networks like BERT and GPT-3, with hundreds of millions to billions of parameters. These systems deliver impressive results but reveal little about their inner workings. The same trend extends across computer vision, reinforcement learning, and other AI domains, as the table below shows:

| AI Category | Older Models | Newer Models |
|---|---|---|
| NLP | Bag of words, SVM | Transformer networks (BERT, GPT-3) |
| Computer Vision | CNN, logistic regression | Masked autoencoders, capsule networks |
| Recommendation | Matrix factorization | Deep reinforcement learning |

According to an MIT Sloan survey, 87% of organizations utilizing AI rely on neural networks, which have inherently low explainability. With opaque models now dominating AI, tackling the black box problem is imperative.

What is explainable AI and why is it needed?

Explainable AI refers to techniques that describe, visualize, and debug complex AI models. XAI enables us to comprehend why algorithms make specific predictions.

Major goals of explainable AI include:

- Transparency – Opening the black box so users understand models

- Trust – Promoting human confidence in AI recommendations

- Causality – Revealing relationships between inputs and outputs

- Control – Allowing adjustment of model behavior

- Fairness – Detecting unwanted bias or discrimination

- Compliance – Meeting legal interpretability mandates

Without XAI, enterprises struggle to implement AI. Leaders hesitate to act on recommendations they don't fully grasp. Regulations in finance, medicine, and other fields require transparency. The chart below shows rising attention to explainable AI:

Google Scholar citations for "explainable AI" papers (Image: Author's analysis)

Explainability unlocks myriad benefits:

- Auditing – Checking models for fairness, safety, and compliance

- Refinement – Improving models by understanding their logic

- Control – Keeping humans in the loop on consequential decisions

- Acceptance – Encouraging user and public buy-in for AI

- Creativity – Enabling non-experts to engage with AI creatively

How explainable AI systems work

XAI employs diverse techniques to clarify how models make predictions:

Intrinsic approaches use inherently interpretable algorithms such as decision trees, generalized additive models, or rule-based systems. These are largely self-explanatory but often less accurate than deep models on complex tasks.

Post-hoc explanation techniques analyze an already-trained model from the outside. Because the model itself is left unchanged, its accuracy is not sacrificed. Examples include:
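As a minimal sketch of an intrinsically interpretable model, the rule-based screener below returns both a decision and the rule that produced it. The loan scenario, the feature names (`dti`, `credit_score`), and every threshold are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Applicant = Dict[str, float]

@dataclass(frozen=True)
class Rule:
    description: str                         # human-readable reason shown to the user
    condition: Callable[[Applicant], bool]   # when the rule fires
    decision: str                            # outcome if it fires

# Hypothetical screening rules, evaluated top to bottom.
RULES: List[Rule] = [
    Rule("debt-to-income ratio above 0.45", lambda a: a["dti"] > 0.45, "decline"),
    Rule("credit score below 600", lambda a: a["credit_score"] < 600, "decline"),
    Rule("credit score of 720 or more", lambda a: a["credit_score"] >= 720, "approve"),
]
DEFAULT: Tuple[str, str] = ("manual review", "no rule matched")

def decide(applicant: Applicant) -> Tuple[str, str]:
    """Return (decision, reason); the reason is the first rule that fired."""
    for rule in RULES:
        if rule.condition(applicant):
            return rule.decision, rule.description
    return DEFAULT

decision, reason = decide({"dti": 0.30, "credit_score": 580})
print(f"{decision}: {reason}")  # prints "decline: credit score below 600"
```

The explanation here is the model itself: there is nothing to approximate, which is the appeal of intrinsic approaches and also the reason they can struggle with patterns that do not reduce to a few readable rules.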

- Sensitivity analysis – Shows how changing inputs affects outputs

- Local approximation – Explains individual predictions via simpler models

- Example-based methods – Provide prototypical examples that influenced decisions

- Visualization and interaction – Enable exploration of model behavior
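Sensitivity analysis, the first technique in the list above, can be sketched in a few lines: nudge one input at a time and record how far the output moves. The `black_box` function below is a made-up stand-in for an opaque fraud model, and its feature names and coefficients are assumptions for illustration only.

```python
import math
from typing import Callable, Dict

Features = Dict[str, float]

def black_box(x: Features) -> float:
    """Hypothetical opaque model: returns a fraud score between 0 and 1."""
    z = 0.002 * x["amount"] - 0.5 * x["account_age_years"] + 1.5 * x["foreign"] - 1.0
    return 1.0 / (1.0 + math.exp(-z))

def sensitivity(model: Callable[[Features], float],
                x: Features,
                rel_step: float = 0.10) -> Dict[str, float]:
    """Change in model output when each feature alone is increased slightly."""
    base = model(x)
    effects = {}
    for name, value in x.items():
        # Use a relative step, falling back to an absolute one for zero values.
        step = rel_step * abs(value) if value != 0 else rel_step
        perturbed = dict(x)
        perturbed[name] = value + step
        effects[name] = model(perturbed) - base
    return effects

transaction = {"amount": 500.0, "account_age_years": 2.0, "foreign": 0.0}
effects = sensitivity(black_box, transaction)
# List features from most to least influential for this one transaction.
for name, delta in sorted(effects.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>18}: {delta:+.4f}")
```

This one-at-a-time probe only describes the model near a single input and ignores interactions between features, so it is best read as a first diagnostic and not a full explanation.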

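For instance, the local interpretable model-agnostic explanations (LIME) method perturbs an input, queries the model on the perturbed samples, and fits a simple interpretable model (typically linear) that approximates the original model near that input. Tree SHAP computes Shapley values, a concept from cooperative game theory, to attribute a tree-ensemble prediction to its input features.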
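To make the attribution idea concrete, here is a minimal sketch of exact Shapley values computed by brute force over every feature coalition. It is not Tree SHAP, which exploits tree structure to avoid this exponential enumeration. The `toy_model`, the input `x`, and the all-zero `baseline` are invented for illustration, and replacing "absent" features with baseline values is one common simplifying assumption.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence

def shapley_values(model: Callable[[List[float]], float],
                   x: Sequence[float],
                   baseline: Sequence[float]) -> List[float]:
    """Exact Shapley attribution of model(x) - model(baseline) to each feature."""
    n = len(x)

    def value(coalition: frozenset) -> float:
        # Features in the coalition keep their real value; the rest are
        # replaced by the baseline value.
        return model([x[i] if i in coalition else baseline[i] for i in range(n)])

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of feature i when added to coalition s.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

def toy_model(v: List[float]) -> float:
    """Hypothetical model with one additive term and one interaction term."""
    return 2.0 * v[0] + v[1] * v[2]

x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print(phi)
```

For this toy model the attributions come out to approximately 2, 3, and 3: the additive term goes entirely to the first feature, and the interaction term is split evenly between the other two. Their sum equals `toy_model(x) - toy_model(baseline)`, the property that makes Shapley-based attributions add up to the prediction.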

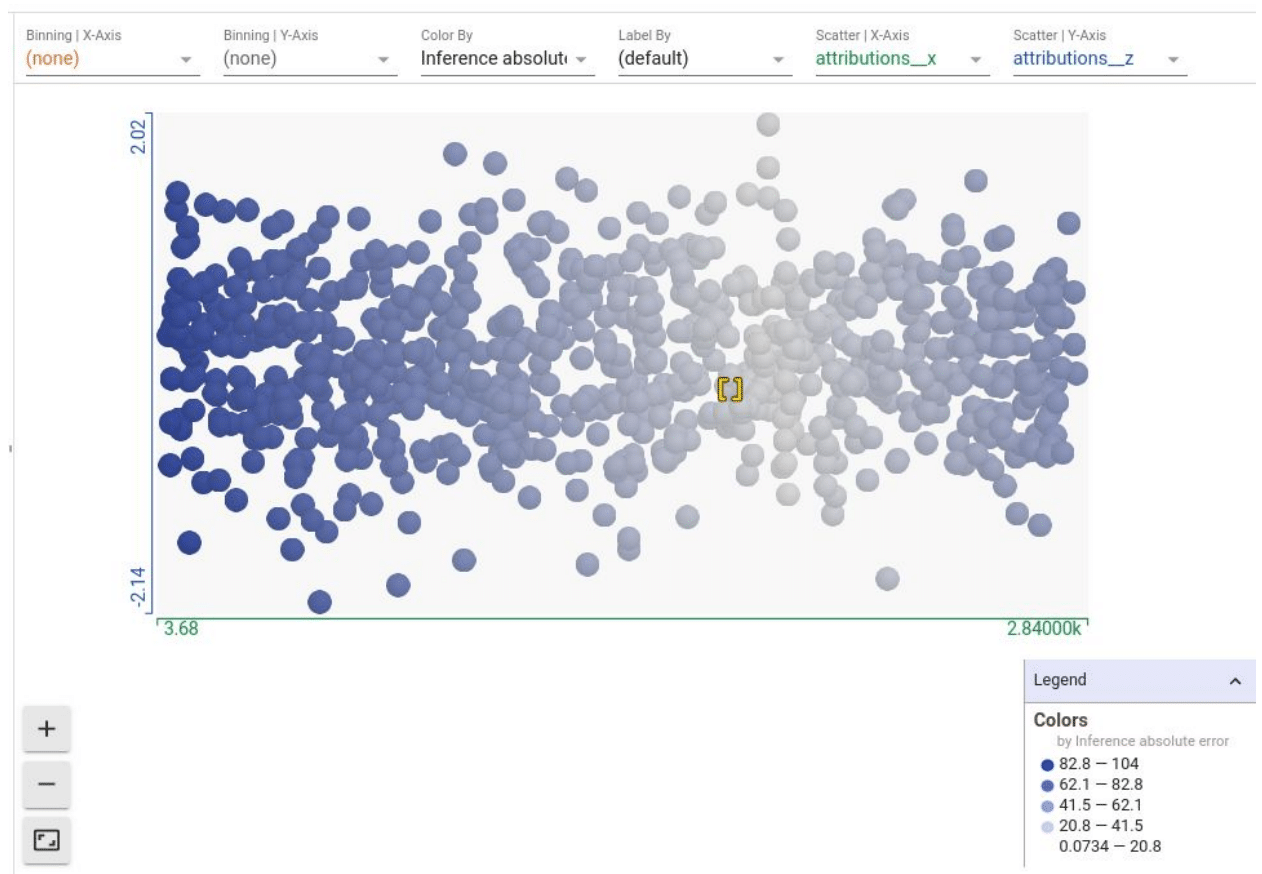

Visual analysis helps reveal model sensitivity (Image: Google Cloud)

Such techniques unpack the black box step-by-step so users can audit systems and resolve unwanted behaviors.

Implementing enterprise-scale explainable AI

To leverage XAI, organizations should:

Prioritize needs – Identify high-impact processes that need more transparency, such as loan approvals. Talk to skeptics and compile their requirements.

Catalog existing models – Inventory AI systems and their inherent interpretability. Favor transparent models where possible.

Scan vendors and startups – Survey the XAI technology landscape. Evaluate third-party tools versus internal options.

Test proofs of concept – Prototype on low-stakes applications first. Compare explainability techniques.

Layer in iteratively – Add explainability incrementally across departments. Get continuous user feedback to guide tooling decisions.

Formalize model documentation – Standardize transparent model cards and require explanation reporting.

Plan for evolution – Given XAI's nascence, expect techniques to rapidly improve. Maintain flexibility.

Monitor for new needs – As AI expands in scope, reassess pain points and explanation adequacy frequently.
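The "Formalize model documentation" step can start small. The sketch below shows one possible shape for a model card record with a basic completeness check. The field names and the example values are assumptions, not an established schema, and a real card would likely carry more detail.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List

@dataclass
class ModelCard:
    """Hypothetical minimal model card; every field name is illustrative."""
    name: str
    owner: str
    intended_use: str
    model_type: str
    explanation_methods: List[str] = field(default_factory=list)
    known_limitations: List[str] = field(default_factory=list)

    def missing_fields(self) -> List[str]:
        """Names of fields left empty, in declaration order."""
        return [key for key, value in asdict(self).items() if not value]

card = ModelCard(
    name="loan-approval-v3",
    owner="credit-risk-team",
    intended_use="Pre-screening of consumer loan applications",
    model_type="gradient-boosted trees",
    known_limitations=["Not validated for business loans"],
)

print(json.dumps(asdict(card), indent=2))
print("Missing:", card.missing_fields())  # prints "Missing: ['explanation_methods']"
```

A check like `missing_fields()` could gate deployment so that no model ships without a documented explanation method, which is one way to make explanation reporting a requirement and not a suggestion.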

With deliberate XAI adoption, enterprises gain indispensable visibility into otherwise-opaque systems.

The future of AI explainability

Explainable AI remains an open challenge. While current techniques prove useful, innovation continues across:

- Multimodal explanation – Combining visual, textual, and interactive formats

- Example-based techniques – Providing intuitive prototypical examples that influenced model decisions

- Counterfactual analysis – Interfaces for interactively exploring how tweaking inputs alters outputs

- Scientific testing – Rigorously evaluating explanation methods with user studies

- Personalized explanation – Tailoring transparency to various user needs and contexts
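Counterfactual analysis from the list above answers the question "what would have to change for the decision to flip?" A minimal sketch, assuming a single feature is varied in fixed steps, is shown here. The `approve` function is a hypothetical stand-in for an opaque model, and its coefficients and threshold are invented.

```python
from typing import Callable, Dict, Optional

Features = Dict[str, float]

def approve(x: Features) -> bool:
    """Hypothetical opaque loan model: True means approved."""
    score = 0.004 * x["credit_score"] - 2.0 * x["dti"] + 0.00001 * x["income"]
    return score >= 2.5

def counterfactual(model: Callable[[Features], bool],
                   x: Features,
                   feature: str,
                   step: float,
                   max_steps: int = 200) -> Optional[float]:
    """Nearest value of one feature (in multiples of step) that flips the decision."""
    original = model(x)
    for k in range(1, max_steps + 1):
        candidate = dict(x)
        candidate[feature] = x[feature] + k * step
        if model(candidate) != original:
            return candidate[feature]
    return None  # no flip found within the search range

applicant = {"credit_score": 640, "dti": 0.40, "income": 50000}
print(approve(applicant))                                        # prints False
print(counterfactual(approve, applicant, "credit_score", 25))    # prints 715
```

The result reads as an actionable statement: this applicant would be approved with a credit score of 715, other things equal. Searching one feature at a time keeps the explanation simple, at the cost of missing cheaper changes that combine several features.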

As XAI matures, easier integration into ML workflows will accelerate enterprise adoption. Cloud platforms continue to roll out turnkey explainability tools, and Google Cloud, AWS, and Microsoft Azure all offer XAI capabilities. The market for dedicated XAI software is forecast to surpass $1 billion by 2026.

With superior accountability, AI systems will expand into new terrain like scientific discovery, education, and the arts – opening up creative new partnerships between humans and intelligent machines.

Conclusion

For enterprises pursuing ambitious AI agendas, explainability is no longer optional: it is essential for competitive advantage, legal compliance, and public trust. Organizations that embrace XAI will build stakeholder confidence, accelerate adoption, and tap AI's full potential. They will intimately understand how their AI arrives at results while keeping humans securely in control.

Of course, explainable AI is not a panacea. True AI transparency will require sustained collaboration between technology innovators, domain experts, social scientists, and ethicists. But rapid advances are unlocking interpretable AI to power our most important systems – financial, medical, judicial, and beyond. By dispelling opacity, enterprises can deploy AI that is not only state-of-the-art but ethically and socially responsible.