Text annotation refers to the process of manually labeling text data to train machine learning (ML) models. With the explosive growth in natural language processing (NLP) capabilities, text annotation has become critical for developing robust language understanding across use cases like sentiment analysis, chatbots, and search relevance. This guide will explore what text annotation entails, why it matters for NLP success, techniques and tools used, and best practices to enable quality annotation.

The Vital Role of Text Annotation for AI

Text annotation provides the labeled training data necessary for supervised machine learning algorithms to learn associations between words, sentences, and the concepts or intents they represent. Manual annotation helps teach nuanced human language that machines cannot yet grasp themselves.

For example, text annotation can enable:

- Sentiment analysis – Labeling text as positive, negative or neutral sentiment so ML models can monitor brand perception on social media.

- Chatbots – Categorizing customer queries by intent (question, complaint, request etc.) to improve conversational AI.

- Search relevance – Tagging text to match user queries with relevant content.

Text annotation has grown exponentially as per analysts like MarketsandMarkets, which predicts 32% CAGR from 2020-2025, becoming a $1.6 billion market as NLP adoption accelerates. My experience aligns with this trend – leading enterprises looking to enhance NLP capabilities invest heavily in quality text annotation.

Text Annotation Enables Transfer Learning

Annotated text datasets also enable transfer learning, allowing NLP models pre-trained on large diverse corpora to be fine-tuned for new domains. For instance, sciBERT fine-tunes BERT models using scientific papers to improve technical language understanding. Quality text annotation unlocks similar benefits across industries.

According to a 2021 arXiv paper, transfer learning can reduce NLP error rates by 40% vs training from scratch. It also slashes data requirements by up to 98%, from millions to just hundreds of annotated examples in some cases.

Key Text Annotation Techniques

Text data can be annotated using various techniques depending on the downstream NLP task. Common techniques include:

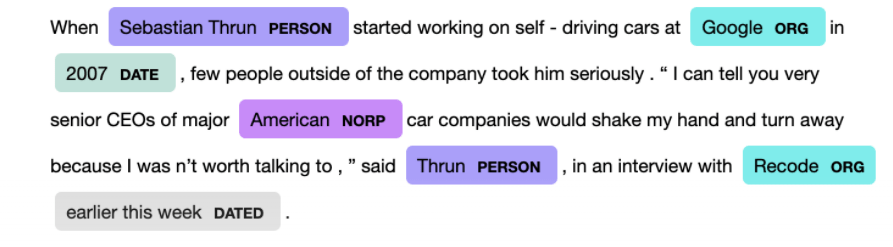

Named Entity Recognition (NER): Labels words like people, organizations, locations, quantities, percentages, etc. This adds semantic context.

Entity Linking: Connects named entities to canonical identifiers like Wikipedia URLs to ground them in shared knowledge.

Sentiment Analysis: Categorizes subjective text snippets as positive, negative or neutral sentiment.

Intent Classification: Labels text by the intent or purpose, like request, question, complaint, confirmation etc.

Relation Extraction: Identifies semantic relationships between entities like "X acquired Y".

Choosing suitable annotation techniques and taxonomies tailored to the end-use case is key for NLP success.

Text Annotation Tools and Formats

Text data can be annotated using common formats like JSON, XML, CSV, etc. Many open-source and cloud-based tools are available such as:

- Label Studio – Feature-rich text annotation tool with integration capabilities.

- Doccano – Intuitive text annotation with active open-source community.

- Prodigy – Fast active learning-based data annotation by Explosion.

These tools increase annotation productivity and lower costs. Formats like JSON provide more flexibility than CSV for complex annotations. Conceptually similar tools have tradeoffs in usability, automation and customization.

Best Practices for Quality Text Annotation

Based on my experience, here are some best practices for high-quality text annotation:

- Have experienced linguists annotate texts to capture nuance accurately.

- Use representative real-world data vs synthetic text.

- Clean and pre-process text before annotating.

- Provide detailed guidelines and train annotators.

- Use multiple annotators and adjudication to resolve conflicts.

- Continuously sample and audit annotated data.

- Monitor and maintain annotation quality via IAA.

- Annotate sufficient volumes of data per category.

Domain expertise and robust processes are key, especially for subjective tasks like sentiment analysis.

Advances and Challenges in Text Annotation

Despite much progress, accurately annotating ambiguities in human language remains challenging. Some promising directions include:

- Leveraging active learning to minimize annotation effort.

- Generating synthetic datasets via data augmentation.

- Involving subject matter experts for specialized domains.

- Using model predictions to aid human annotation.

However, pure machine annotation still cannot match human nuance. As per recent studies, state-of-the-art NLP models correctly classify just over 70% of sentences in benchmarks like GLUE, highlighting room for improvement.

The Future of Text Annotation

As NLP applications become ubiquitous, text annotation will continue growing in importance. My outlook on key trends includes:

- Enterprises adopting a hybrid human-machine approach, using AI to amplify expert linguists.

- Increased adoption of synthetic data generation to complement human annotation.

- Specialized text annotation tools forVertical NLP use cases.

- Text annotation being embedded earlier in ML workflows.

- A thriving ecosystem of text annotation vendors, tools and datasets.

In summary, quality text annotation is fundamental to realizing robust NLP capable of expert-level language understanding. Prioritizing annotation practices will be key to unlocks massive value across industries through optimized conversational AI, sentiment analysis, search and more.