The rapid adoption of artificial intelligence (AI) across industries is transforming business and society. However, as high-profile cases such as the allegations of gender bias in Apple Card credit limits have shown, the remarkable possibilities AI enables also come with great responsibilities.

Developing AI systems according to sound ethical principles is no longer optional for organizations – it is a prerequisite for earning public trust and avoiding pitfalls.

Drawing on my work as an AI ethics consultant and a decade of experience in data engineering and machine learning, I explore in this guide the four foundational principles of responsible AI: fairness, privacy, security and transparency. I support each principle with real-world examples, data, analysis and best practices.

The Accelerating Pace of AI Adoption

The capabilities of AI systems are advancing rapidly, driving surging adoption. According to Gartner's 2019 CIO Survey, the number of enterprises implementing AI grew by 270% over the preceding four years.

The AI market is growing quickly as well. IDC forecast that worldwide spending on AI would climb from $85.3 billion in 2021 to more than $204 billion in 2025, a compound annual growth rate (CAGR) of 24.5%.
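A compound annual growth rate links a start value, an end value and a number of years: CAGR = (end / start)^(1 / years) - 1. The minimal Python sketch below applies that standard formula to the start and end spending values quoted above, and shows why a quoted rate only checks out against the right time span. The function name `cagr` is our own illustration.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Start and end spending figures, in billions of US dollars.
start, end = 85.3, 204.0
for years in (3, 4):
    print(f"{years} years: {cagr(start, end, years):.1%}")
```

Going from $85.3 billion to $204 billion implies roughly 24% a year over four years, but about 34% a year if the same growth happens in three.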

What's driving this trend? AI is creating tremendous value across sectors:

- In healthcare, AI analyzes medical images to support diagnosis and treatment, in some studies matching or exceeding the accuracy of human specialists.
- In financial services, AI detects fraud and assesses risk, strengthening security.
- In manufacturing, AI optimizes supply chains, increasing resilience and lowering costs.
- Across industries, AI chatbots provide round-the-clock customer service through natural-language conversations.

However, this rapid progress also raises ethical concerns. Many organizations struggle to develop AI responsibly: in one Accenture study, around 65% of risk leaders admitted they lacked the full capability to assess AI risks.

Ignoring responsible AI principles can lead organizations down perilous paths, with costly consequences:

- After claims of gender bias, the Apple Card drew an investigation by a state regulator, the New York State Department of Financial Services (NYDFS).
- Recruitment AI tools that exhibited racial or gender bias have faced lawsuits; in one case, this resulted in a $1.5 million settlement.
- Microsoft's AI-powered chatbot Tay, launched on Twitter in 2016, began posting hate speech within 24 hours, forcing Microsoft to take it offline.

These examples highlight why acting ethically is no longer optional as AI becomes widespread. Next, let's examine the four central principles of responsible AI.

1. Fairness: Eliminating Bias for Inclusive AI

For AI systems to be deemed fair and trustworthy, they must serve all user groups equitably without exhibiting unintended prejudice or discrimination. However, real-world data often contains ingrained societal biases. If not proactively addressed, those biases can lead AI models to make systematically unfair decisions that disadvantage certain demographics.

Consider the following examples of biased AI systems uncovered in recent years:

-

In 2019, multiple allegations surfaced that Apple‘s credit card algorithms discriminated against women by offering them lower credit limits compared to men with similar financial profiles. The issue sparked a viral #AppleCardIsSexist protest on social media and led to a federal investigation by the Consumer Financial Protection Bureau (CFPB). Though Apple denied any intentional gender bias, the damage was done to its brand reputation.

-

According to a 2021 study by Harvard Business School, biased recruiting algorithms potentially prevent over 27 million US workers from finding jobs. These "hidden workers" filtered out by AI systems include women, people with physical disabilities, ethnic minorities, LGBTQ individuals and others with non-traditional resumes.

-

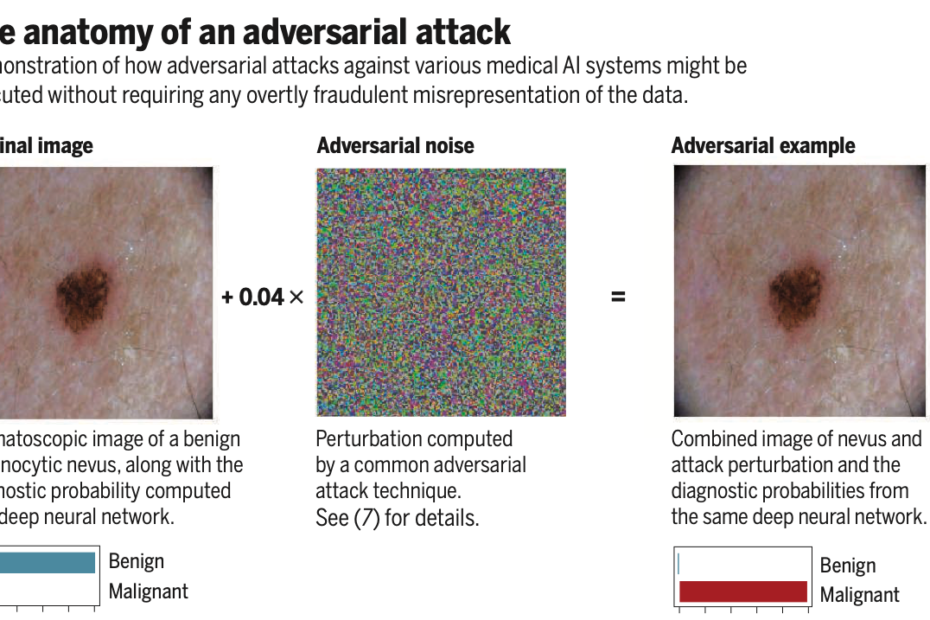

Analysis of major facial recognition tools like Microsoft‘s and Amazon‘s found accuracy rates for dark-skinned women to be as low as 65%, while light-skinned men had minimal errors (see Figure 1). The racial bias makes these tools unreliable for sensitive use cases like law enforcement.

Figure 1: Independent tests found major facial recognition tools exhibited significant racial and gender bias in accuracy (Image source: Harvard University)

Such unfair outcomes often arise due to two root causes:

- Biased data: Training datasets reflect societal biases unless they are vigilantly curated. For instance, AI recruiting tools inherit gender biases if trained mainly on resumes of the male candidates historically favored in technical fields.
- Biased algorithms: Choices in data preparation and modeling techniques can unintentionally disadvantage certain groups. For example, popular deep learning models can struggle to accurately classify members of groups that are underrepresented in the training data.

"We found higher error rates for every facial recognition software we tested identifying darker-skinned women. Those higher rates are unacceptable." – Joy Buolamwini, MIT Media Lab

Reducing unfairness and bias is essential for AI that serves diverse populations equitably. Next, we explore best practices for doing so.

Best Practices for Fair AI

Here are some proactive measures organizations can take to enhance fairness in AI systems:

- Remove biased data: Rigorously inspect datasets and scrub or adjust any biased, non-representative or illegally collected data.
- Improve representation: Use techniques like oversampling underrepresented groups and generating synthetic data to reduce dataset imbalances.
- Test for bias: Continuously evaluate models on data stratified by user subgroup to detect performance gaps, monitoring metrics such as statistical parity, equal opportunity and equalized odds.
- Apply bias mitigation: Use methods such as sample reweighting, adversarial debiasing and output constraints to reduce discrimination.
- Diversify teams: Include diverse perspectives in development teams, and involve domain experts in ethics and social science to spot potential sources of unfairness early.
- Enable human oversight: Implement processes for humans to review or override high-impact model predictions as a check against unintended bias.
- Document methodology: Record data provenance and modeling choices to support transparency and auditing.
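The bias-testing metrics named above can be computed directly from a model's predictions, split by group. The sketch below is a minimal, dependency-free illustration that assumes binary labels and predictions and a single sensitive attribute with one "privileged" and one "unprivileged" group. The function names and toy data are hypothetical, and toolkits differ in sign conventions and thresholds, so treat it as a starting point rather than a reference implementation. Equalized odds (sometimes called equality of odds) is reported here as the larger of the true-positive-rate and false-positive-rate gaps.

```python
from typing import Sequence


def rate(values: Sequence[int]) -> float:
    """Fraction of 1s in a sequence of 0/1 values (0.0 if empty)."""
    return sum(values) / len(values) if values else 0.0


def group_rates(y_true: Sequence[int], y_pred: Sequence[int],
                groups: Sequence[str], group: str) -> dict:
    """Selection rate, true positive rate and false positive rate for one group."""
    idx = [i for i, g in enumerate(groups) if g == group]
    return {
        "selection_rate": rate([y_pred[i] for i in idx]),
        "tpr": rate([y_pred[i] for i in idx if y_true[i] == 1]),  # on actual positives
        "fpr": rate([y_pred[i] for i in idx if y_true[i] == 0]),  # on actual negatives
    }


def fairness_report(y_true, y_pred, groups, privileged: str, unprivileged: str) -> dict:
    p = group_rates(y_true, y_pred, groups, privileged)
    u = group_rates(y_true, y_pred, groups, unprivileged)
    return {
        # Statistical parity: gap in positive-prediction (selection) rates.
        "statistical_parity_diff": u["selection_rate"] - p["selection_rate"],
        # Equal opportunity: gap in true positive rates.
        "equal_opportunity_diff": u["tpr"] - p["tpr"],
        # Equalized odds: the larger of the TPR and FPR gaps.
        "equalized_odds_gap": max(abs(u["tpr"] - p["tpr"]), abs(u["fpr"] - p["fpr"])),
    }


# Toy data (hypothetical): true labels, model predictions and group membership.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(fairness_report(y_true, y_pred, groups, privileged="a", unprivileged="b"))
# → {'statistical_parity_diff': -0.5, 'equal_opportunity_diff': -0.5, 'equalized_odds_gap': 0.5}
```

In this toy case group "b" receives positive predictions at a third of group "a"'s rate and qualified members of "b" are approved half as often, which is exactly the kind of gap stratified testing is meant to surface.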

A proactive, rigorous approach is key to developing AI that is fair, transparent and worthy of public trust.
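One common form of the sample-reweighting mitigation mentioned above is the reweighing scheme described by Kamiran and Calders: each training example gets the weight P(group) · P(label) / P(group, label), so that group membership and label look statistically independent in the weighted data. The sketch below implements that formula on hypothetical toy data; the function name is our own.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """One weight per sample: P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    weights = []
    for g, y in zip(groups, labels):
        expected = (group_counts[g] / n) * (label_counts[y] / n)  # if independent
        observed = joint_counts[(g, y)] / n
        weights.append(expected / observed)
    return weights


# Hypothetical training data: group "a" is labeled positive far more often than "b".
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)
print([round(w, 2) for w in weights])
# → [0.67, 0.67, 0.67, 2.0, 2.0, 0.67, 0.67, 0.67]
```

After weighting, both groups have a weighted positive rate of 0.5. The weights can then be passed to any learner that accepts per-sample weights, such as the `sample_weight` argument that many scikit-learn estimators accept in `fit`.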

2. Privacy: Protecting Sensitive User Data

When developing AI with large datasets, safeguarding personal data is an ethical and legal obligation. Yet data breaches exposing consumers' sensitive information are increasingly common, creating legal, financial and reputational risks for organizations.

According to the Identity Theft Resource Center's 2021 annual report, there were 1,862 publicly reported data breaches in the US in 2021, a 68% rise from 2020 and 23% above the previous record set in 2017.

Healthcare saw the most breaches, at 37% of the total, highlighting the vulnerabilities that emerge as medical providers adopt AI and amass larger datasets. Meanwhile, IBM's 2022 Cost of a Data Breach Report put the average cost of a breach at $4.35 million.

Such incidents cause financial losses and competitive harm to businesses. But even more concerning are the dangers data breaches pose to individuals by exposing sensitive personal information. Identity theft, financial fraud and mistreatment can result when private data is leaked.

To uphold privacy principles while building AI, responsible organizations take measures including:

- Minimize data collection: Collect only the data required for training and the expected use cases.
- De-identify data: Use aggregation, generalization, pseudonymization and other techniques to remove or replace personally identifiable information in datasets.
- Anonymize data: Apply methods like k-anonymity, which generalizes or suppresses quasi-identifiers (such as age or ZIP code) until every record is indistinguishable from at least k − 1 others, to reduce the risk of re-identification.
- Encrypt data: Store and transmit sensitive datasets only in encrypted form, using strong standards such as AES-256 for data at rest and TLS for data in transit.
- Restrict data access: Enforce stringent access policies that grant access strictly on a need-to-know basis, and allow only de-identified or aggregated data for testing.
- Enable data rights: Tell consumers what data is collected about them and let them access, correct, delete or opt out of its use, as regulations like the GDPR require.
- Notify breaches: Maintain clear incident response protocols for promptly investigating breaches and notifying affected users and authorities, as required by laws like the GDPR and US state breach-notification statutes.
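The de-identification and anonymization steps above can be combined into a small release pipeline. The sketch below, using only the Python standard library, replaces a direct identifier with a keyed HMAC-SHA256 pseudonym, generalizes age into ten-year bands and ZIP codes into three-digit prefixes, and then checks k-anonymity over those quasi-identifiers. The key, field names and records are hypothetical; a real pipeline would load the key from a secrets manager and choose quasi-identifiers from an actual re-identification risk assessment.

```python
import hashlib
import hmac
from collections import Counter

# Hypothetical secret key; in practice load it from a secrets manager, never hard-code it.
PSEUDONYM_KEY = b"replace-with-a-secret-key"


def pseudonymize(identifier: str) -> str:
    """Replace a direct identifier with a keyed HMAC-SHA256 token."""
    return hmac.new(PSEUDONYM_KEY, identifier.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def generalize(record: dict) -> dict:
    """Drop direct identifiers and coarsen the quasi-identifiers."""
    decade = (record["age"] // 10) * 10
    return {
        "user": pseudonymize(record["email"]),
        "age_band": f"{decade}-{decade + 9}",
        "zip3": record["zip"][:3] + "**",
        "diagnosis": record["diagnosis"],  # sensitive attribute kept for analysis
    }


def is_k_anonymous(records: list[dict], quasi_identifiers: tuple[str, ...], k: int) -> bool:
    """True if every combination of quasi-identifier values occurs at least k times."""
    counts = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return not counts or min(counts.values()) >= k


raw = [
    {"email": "ana@example.com", "age": 34, "zip": "10115", "diagnosis": "flu"},
    {"email": "ben@example.com", "age": 37, "zip": "10117", "diagnosis": "asthma"},
    {"email": "cy@example.com", "age": 52, "zip": "20095", "diagnosis": "flu"},
    {"email": "di@example.com", "age": 58, "zip": "20097", "diagnosis": "diabetes"},
]
released = [generalize(r) for r in raw]
print(is_k_anonymous(raw, ("age", "zip"), k=2))             # False: every raw row is unique
print(is_k_anonymous(released, ("age_band", "zip3"), k=2))  # True
```

Two caveats apply: pseudonymized data still counts as personal data under the GDPR, and k-anonymity alone does not stop attribute disclosure when everyone in a group shares the same sensitive value.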

With cyber attacks and data leaks on the rise, making privacy protection a top priority is key to building trust and avoiding misuse of sensitive user data.

3. Security: Safeguarding Against Threats

To prevent harmful misuse, robust security mechanisms are imperative for AI systems. Malicious actors may attempt to compromise confidentiality, integrity or availability of AI through hacking, unauthorized access or manipulation.

Attacks targeting AI can have dangerous repercussions depending on the application. For example, misleading autonomous vehicles with fake signals could cause accidents. Poisoning a neural network powering medical diagnoses could risk patients' lives.

Some alarming examples of attacks successfully fooling AI systems include:

-

Researchers fooled Tesla‘s autopilot into dangerously veering into the wrong lane by strategically placing small stickers on the road to confuse its object recognition system.

-

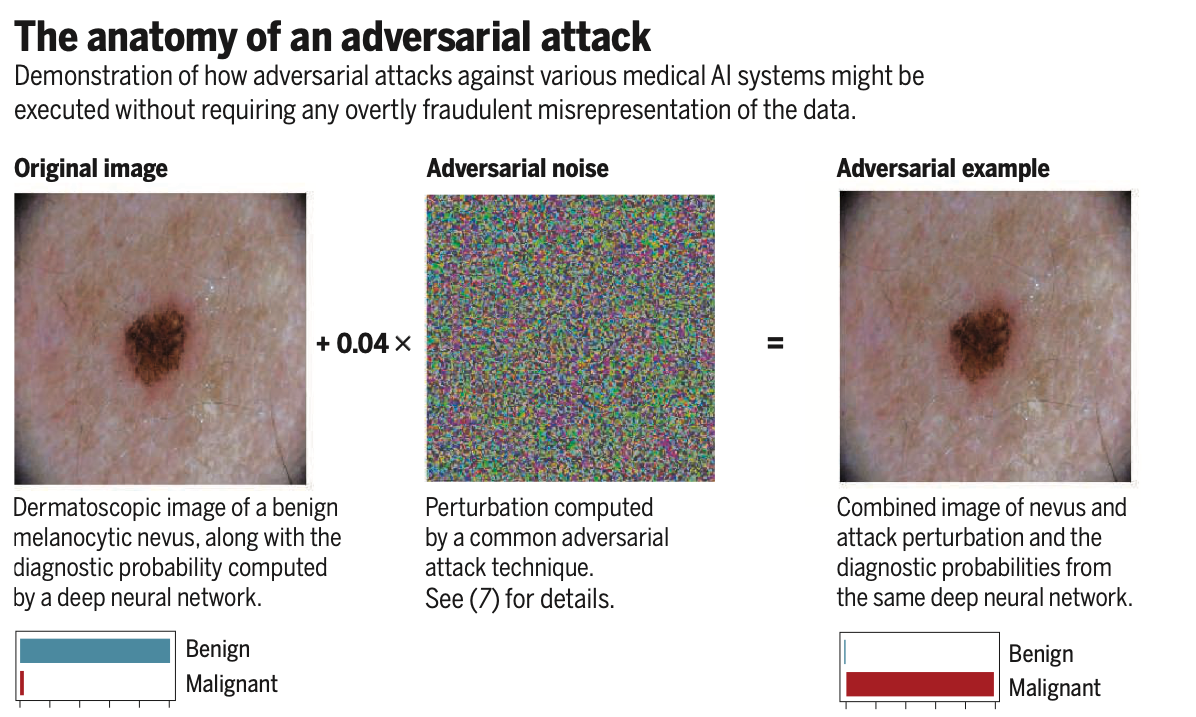

By subtly altering digital images, attackers fooled MRI-based AI diagnosis tools into mis-classifying cancerous tumors as benign, showing vulnerability to deception.

-

Cybercriminals use AI generated fake media content in over 60% of spear-phishing campaigns, tricking employees into compromising corporate networks.

Threat actors keep finding new angles of attack as AI capabilities grow. Some common methods attackers use to undermine AI systems include:

- Data poisoning: Injecting corrupted or misleading data into training datasets to manipulate results.
- Model poisoning: Tampering with models directly to alter their behavior.
- Evasion attacks: Crafting adversarial inputs designed to cause incorrect predictions.
- Model extraction: Stealing or replicating a proprietary model, for example by systematically querying its prediction API or through insider access.
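To make evasion attacks concrete, here is a minimal sketch of the fast gradient sign method (FGSM), a classic technique for generating adversarial inputs, applied to a toy logistic-regression model in plain Python. The weights, input and epsilon are made-up values. Real attacks target much larger models, usually through automatic differentiation, but the idea is the same: a small, bounded change to every feature pushes the prediction across the decision boundary.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights: list[float], bias: float, x: list[float]) -> float:
    """P(positive class) under a logistic-regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(weights, bias, x, y_true, epsilon):
    """Fast gradient sign method for logistic regression.

    The gradient of the log-loss with respect to the input is (p - y) * w, so each
    feature moves by epsilon in the direction that increases the loss.
    """
    p = predict_proba(weights, bias, x)
    grad = [(p - y_true) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


# Hypothetical trained model and a correctly classified positive example.
weights, bias = [2.0, -1.5, 0.5], -0.2
x = [0.6, 0.2, 0.4]
x_adv = fgsm(weights, bias, x, y_true=1, epsilon=0.3)

print(f"original:    p = {predict_proba(weights, bias, x):.3f}")      # above 0.5: positive
print(f"adversarial: p = {predict_proba(weights, bias, x_adv):.3f}")  # below 0.5: flipped
```

Each feature moved by at most 0.3, yet the predicted class flipped. This is why red team testing often includes adversarial examples like these.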

To fortify AI systems against breaches and exploitation, responsible organizations take measures like:

- Risk analysis: Identify the motivations behind and impacts of potential attacks specific to the AI application and its data.
- Security architecture: Follow secure computing best practices such as multi-factor authentication, strict access controls, encryption and compartmentalization.
- Red team testing: Proactively test systems against simulated attacks to uncover vulnerabilities early.
- Monitoring: Detect anomalies in data or prediction patterns that may indicate manipulation or misuse.
- Incident response: Have plans in place for forensic analysis and remediation if an AI system is attacked.
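Monitoring can start as simply as comparing incoming predictions with a baseline. The sketch below flags a batch whose mean prediction score sits too far from a baseline, using a plain z-test on the batch mean. The function name, threshold and scores are hypothetical. A z-test assumes roughly independent scores, and production systems usually track many more signals, such as input feature distributions, per-class rates and query volume per client. Treat it as a starting point, not a detector.

```python
import math
import statistics


def batch_is_anomalous(baseline_scores, batch_scores, z_threshold=3.0):
    """Return (flag, z) for a z-test of the batch mean against the baseline.

    z = (batch_mean - baseline_mean) / (baseline_std / sqrt(batch_size))
    """
    mu = statistics.fmean(baseline_scores)
    sigma = statistics.stdev(baseline_scores)
    if sigma == 0:
        raise ValueError("baseline scores have zero variance")
    z = (statistics.fmean(batch_scores) - mu) / (sigma / math.sqrt(len(batch_scores)))
    return abs(z) > z_threshold, z


# Made-up model scores: a baseline period, a normal batch and a suspicious batch.
baseline = [0.2, 0.3] * 50
normal_batch = [0.2, 0.3] * 5
suspicious_batch = [0.8, 0.9] * 5

for name, batch in [("normal", normal_batch), ("suspicious", suspicious_batch)]:
    flagged, z = batch_is_anomalous(baseline, batch)
    print(f"{name}: z = {z:.1f}, flagged = {flagged}")
```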

The expanding capabilities enabled by AI also expand avenues of potential misuse. Maintaining vigilant security and governance is imperative, especially for applications involving critical infrastructure or sensitive data.

4. Transparency: Enabling Trust with Explainability

For stakeholders to place trust in AI systems, they must have insight into how and why particular decisions are made. However, many advanced algorithms like deep neural networks tend to act as impenetrable "black boxes". Their complex inner workings defy easy interpretation.

Lack of transparency becomes especially problematic when AI errs or exhibits bias, leaving affected people with no clear path to redress. Opaque AI undermines trust and accountability.

Some motivations for transparent AI systems include:

- Informed decisions: When an AI's reasoning is explained, humans can better judge when to trust or override its suggestions.
- Performance verification: Inspecting models helps verify that they behave as designed for the intended use case.
- Failure analysis: Interpretability enables detailed auditing of undesirable model behavior or predictions.
- Compliance: Regulations like the EU's General Data Protection Regulation (GDPR) give individuals the right to meaningful information about the logic involved in automated decisions that significantly affect them.
- Trust building: By revealing their inner logic, transparent AI systems let stakeholders verify their accuracy, fairness and reliability.

Figure 2: An adversarial attack fools an AI system into misdiagnosing a benign mole as malignant skin cancer. Lack of transparency makes detecting such vulnerabilities harder. (Image credit: Mahmood et al., 2018)

Some best practices to increase transparency in AI systems include:

- Select interpretable models: Use inherently explainable ML models like decision trees or linear regression when accuracy requirements allow.
- Apply interpretability techniques: For complex but high-performing models like neural networks, apply methods like LIME, SHAP or integrated gradients to approximate explanations.
- Visualize models: Use tools to visualize model architecture, decision boundaries, data flows and feature importance to build intuition.
- Document methodology thoroughly: Record data provenance, model selection, the tuning process, intended use cases and limitations to support internal and external auditing.
- Enable user-friendly explanations: Present model logic and decisions to impacted users in an intuitive, accessible way.
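Linear models are a good example of inherent explainability: each feature's contribution to a prediction is its coefficient times how far the feature sits from its average, and these contributions add up exactly to the gap between this prediction and the average prediction. For linear models with independent features, these are also the SHAP values. The sketch below applies this to a hypothetical credit-scoring model; the feature names, coefficients and means are invented for illustration.

```python
def explain_linear(weights: dict, bias: float, feature_means: dict, x: dict):
    """Split a linear score into per-feature contributions w_i * (x_i - mean_i).

    The contributions sum to (score for x) - (score for the average input).
    """
    baseline = bias + sum(w * feature_means[f] for f, w in weights.items())
    score = bias + sum(w * x[f] for f, w in weights.items())
    contributions = {f: w * (x[f] - feature_means[f]) for f, w in weights.items()}
    return baseline, score, contributions


# Hypothetical credit-scoring model whose score is the log-odds of approval.
weights = {"income_k": 0.04, "debt_ratio": -3.0, "years_employed": 0.1}
bias = -1.0
feature_means = {"income_k": 65.0, "debt_ratio": 0.35, "years_employed": 5.0}
applicant = {"income_k": 45.0, "debt_ratio": 0.55, "years_employed": 2.0}

baseline, score, contributions = explain_linear(weights, bias, feature_means, applicant)
print(f"average applicant score: {baseline:+.2f}, this applicant: {score:+.2f}")
for feature, c in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"  {feature:<15} {c:+.2f}")
```

A ranked list like this ("lower income than average lowered your score the most") is the kind of user-friendly explanation that can be shown to an impacted applicant.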

Finding the right balance between accuracy and interpretability is key to enabling transparency, meeting regulations, and building trust through responsible AI.
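When a model is too complex to read directly, feature importance can still be estimated from the outside. The sketch below uses permutation importance, a simpler model-agnostic technique than LIME or SHAP: shuffle one feature column at a time and measure how much accuracy drops. The black-box model and data are hypothetical, and results can mislead when features are strongly correlated.

```python
import random


def accuracy(model, rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(labels)


def permutation_importance(model, rows, labels, n_features, n_repeats=20, seed=0):
    """Mean drop in accuracy when one feature column is randomly shuffled."""
    rng = random.Random(seed)
    base = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(base - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical black box: approves when feature 0 exceeds 0.5 and ignores feature 1.
def black_box(row):
    return int(row[0] > 0.5)


data_rng = random.Random(42)
rows = [[data_rng.random(), data_rng.random()] for _ in range(200)]
labels = [black_box(r) for r in rows]
print([round(i, 3) for i in permutation_importance(black_box, rows, labels, n_features=2)])
```

Feature 1 scores exactly zero because the model never uses it, while shuffling feature 0 causes a large accuracy drop, revealing what the black box actually relies on.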

Turning Principles into Practice

While theoretical AI ethics frameworks provide a strong foundation, realizing responsible AI requires concrete actions woven throughout development and deployment:

- Assign responsibility: Appoint diverse leaders, including ethicists, to oversee responsible AI (RAI) governance, and make teams accountable for ethical AI through job expectations.
- Establish policies and processes: Codify principles into formal governance policies, system design guidelines and development processes, and integrate bias testing tools into workflows.
- Educate teams: Raise awareness through ethics training across technical, business and operations units, and discuss case studies relevant to the organization's focus areas.
- Perform impact assessments: Identify and mitigate the risks AI systems pose to stakeholders through ethical, legal and social impact reviews, and continually monitor for emerging risks.
- Enable transparency: Provide clear explanations of model logic, the testing process, use cases and limitations to build trust in AI systems.
- Expand access: Develop inclusive AI solutions that serve people equitably regardless of ability, language or economic status, and provide affordable options.
- Collaborate responsibly: Participate in industry coalitions that promote ethics, and help shape standards that reflect shared human values.
- Monitor the landscape: Keep pace with evolving regulations, risks and public sentiment around AI ethics across global markets.

While cultivating responsible AI has challenges, the consequences of inaction are far graver. With deliberate leadership commitment and cross-functional engagement, organizations can develop AI that is fair, accountable and aligned with human values.

The Road Ahead

AI already affects many facets of society, and massive investments make it likely that this trend will accelerate. Developing AI that people can trust is both a business imperative and a shared social obligation.

This guide summarized key principles, real risks, and proactive practices that comprise responsible AI. However, realizing trusted human-centric AI requires sustained engagement between companies, governments, academics and the public.

If you need help instilling responsible AI practices tailored to your organization and industry, AI Multiple's trusted network of AI ethics consultants can guide you. Our experts have hands-on experience auditing AI systems and providers against ethics standards.

Reach out to us to discuss how we can partner with you on your organization's responsible AI journey. The future demands that we collectively raise the bar of responsibility as AI capabilities advance.