Natural language generation (NLG) is a subfield of artificial intelligence that automates the production of text, and it is rapidly changing how content gets created. In this guide, we’ll cover what NLG is, why it matters, how advanced systems are designed, real-world applications, limitations and ethical considerations, and the outlook for the technology.

What Is Natural Language Generation and Why It Matters

NLG is the use of AI algorithms to automatically generate readable narrative content from structured data. In other words, it converts raw data into texts that sound natural to humans.

This content can take many forms—financial reports, product descriptions, sports game recaps, research paper summaries, and more. NLG holds enormous promise because high-quality, customized textual content is critical for organizations today, yet manual creation struggles to scale.

As the graph below illustrates, the volume of potentially valuable data is exploding. Meanwhile, customers expect personalized experiences. This is fueling demand for NLG applications.

NLG allows businesses to leverage data in new ways and automate content at unprecedented speeds and levels of personalization. According to ResearchAndMarkets, the global NLG market will grow from $375 million in 2020 to over $1.7 billion by 2027 at a CAGR of 23%. From product descriptions to financial reports and conversational bots, NLG enables the data-to-text revolution.

How Sophisticated NLG Systems Work

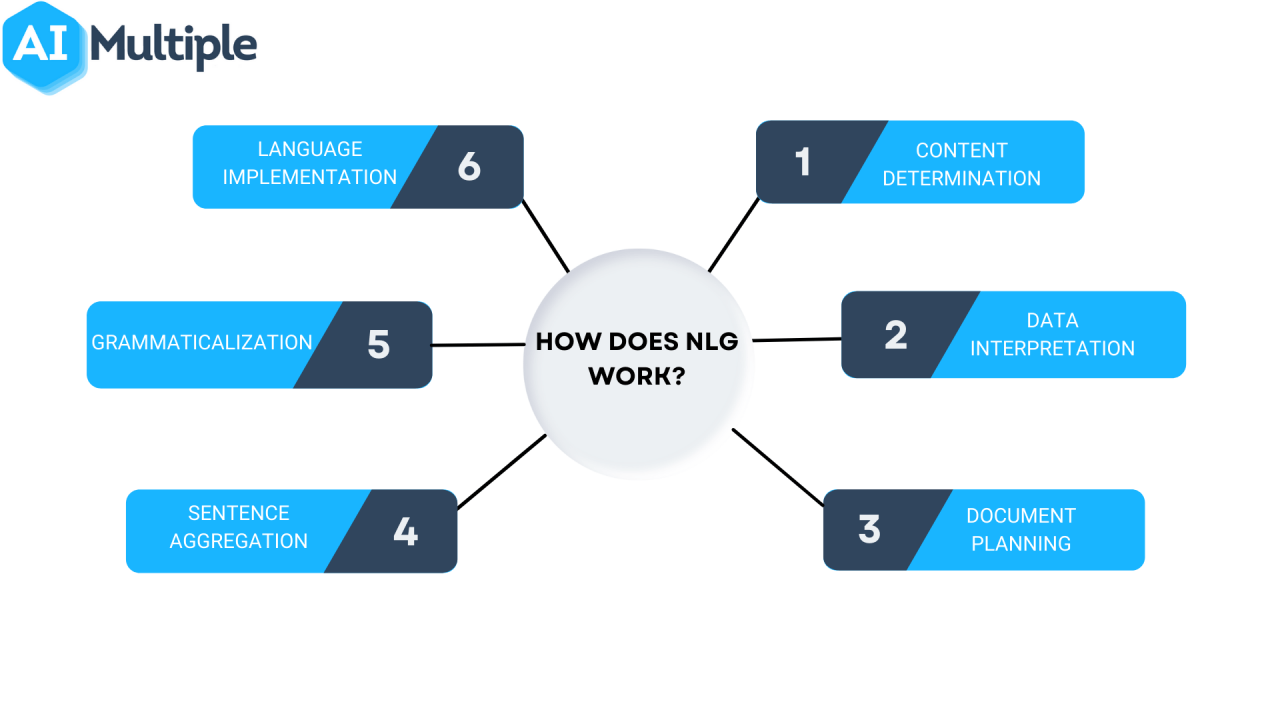

Modern NLG systems leverage various AI techniques to automate the complex process of expert text generation. There are six main stages:

1. Content Determination

First, the goals and input data parameters are defined. For example, an e-commerce firm wants product descriptions generated from details like price, materials, and usage scenarios. Subject matter experts help determine the most relevant inputs and outputs based on end goals.
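As a rough illustration of this step, the sketch below filters a product record down to the fields chosen for the description. The `ProductRecord` class, the `SELECTED_FIELDS` tuple, and the sample values are all hypothetical and stand in for whatever a real catalog provides.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class ProductRecord:
    """Hypothetical input record for one product."""
    name: str
    price: float
    materials: List[str]
    usage: List[str]
    internal_sku: str = ""  # present in the data but not meant for customers

# Fields the subject matter experts decided the description should cover (assumed).
SELECTED_FIELDS = ("name", "price", "materials", "usage")

def determine_content(record: ProductRecord) -> dict:
    """Keep only the selected fields, dropping any that are empty."""
    selected = {}
    for field_name in SELECTED_FIELDS:
        value = getattr(record, field_name)
        if value not in ("", [], None):
            selected[field_name] = value
    return selected

record = ProductRecord(
    "Trail Jacket", 129.0, ["recycled nylon"], ["hiking", "commuting"], "SKU-0001"
)
content = determine_content(record)
```

Here `content` holds the name, price, materials, and usage, while the internal SKU is left out because it was never selected.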

2. Data Interpretation

Next, the input data is analyzed using natural language processing, information extraction, and machine learning algorithms to recognize key patterns, relationships, and meaning that will inform the text. For instance, the materials and usage data would be used to infer the product’s typical consumer profile.
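To make the idea concrete, here is a deliberately simple, rule-based stand-in for the interpretation step. A production system would more likely use trained models; the `PROFILE_RULES` table and the profile labels are invented for illustration.

```python
# Hypothetical cue-to-profile rules; a real system might learn these from data.
PROFILE_RULES = {
    "hiking": "outdoor enthusiasts",
    "commuting": "daily commuters",
    "recycled nylon": "eco-conscious shoppers",
}

def infer_profiles(materials, usage):
    """Return consumer profiles implied by usage and materials, in first-seen order."""
    profiles = []
    for cue in list(usage) + list(materials):
        profile = PROFILE_RULES.get(cue.lower())
        if profile and profile not in profiles:
            profiles.append(profile)
    return profiles

profiles = infer_profiles(["Recycled Nylon"], ["hiking", "commuting"])
```

Cues with no matching rule are ignored, so unknown materials add nothing to the inferred profile.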

3. Document Planning

The narrative structure and outline are created automatically based on the input data and desired content. This outlines what information will be conveyed and in what order it should flow logically. Our product description may start with materials and usage, transition to target consumers and buying considerations, and close with pricing and specifications.
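A minimal sketch of document planning might treat the outline as a fixed section order and keep only the sections that have data. The order below follows the product description example in the text; the key names and sample messages are assumptions.

```python
# Section order taken from the example above; the key names are hypothetical.
SECTION_ORDER = [
    "materials",
    "usage",
    "target_consumers",
    "buying_considerations",
    "price",
    "specifications",
]

def plan_document(messages: dict) -> list:
    """Return (section, content) pairs in narrative order, skipping missing sections."""
    return [(section, messages[section]) for section in SECTION_ORDER if section in messages]

messages = {
    "price": 129.0,
    "target_consumers": ["outdoor enthusiasts"],
    "materials": ["recycled nylon"],
    "usage": ["hiking"],
}
plan = plan_document(messages)
```

The input dictionary is unordered from the planner's point of view; the plan always comes out as materials, usage, target consumers, then price.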

4. Microplanning

At a granular level, the precise wording and phrasing for each sentence is determined based on the data and intended tone. Algorithms select words and linguistic constructs optimized to convey the information clearly and naturally.
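Two common microplanning tasks are aggregation (folding several items into one phrase) and lexical choice (picking words that fit the tone). The sketch below shows a toy version of both; the `LEXICON` entries and function names are hypothetical.

```python
def join_items(items):
    """Aggregate a list into one phrase: 'a', 'a and b', or 'a, b, and c'."""
    items = list(items)
    if len(items) <= 1:
        return "".join(items)
    if len(items) == 2:
        return f"{items[0]} and {items[1]}"
    return ", ".join(items[:-1]) + f", and {items[-1]}"

# Hypothetical tone lexicon: the same concept worded for different registers.
LEXICON = {
    "formal": {"suited": "is well suited to"},
    "casual": {"suited": "is great for"},
}

def word_usage(name, usage, tone="formal"):
    """Build a sentence specification (not yet capitalized or punctuated)."""
    verb = LEXICON[tone]["suited"]
    return f"the {name} {verb} {join_items(usage)}"

spec = word_usage("Trail Jacket", ["hiking", "commuting", "travel"], tone="casual")
```

The result is still a rough specification; capitalization and final punctuation are left to the next stage.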

5. Surface Realization

The grammar, syntax, punctuation, and spelling are finalized to output well-formed, readable sentences and paragraphs. Additional checks may correct factual errors.
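As an illustration, a very small realizer could clean up whitespace, fix spacing before punctuation, apply a naive a/an rule, and add capitalization and a final period. This is a sketch only: the a/an rule looks just at the next letter, so it gets words like "hour" or "university" wrong, and it does not attempt spelling or fact checks.

```python
import re

def _fix_article(match):
    """Choose 'a' or 'an' from the first letter of the following word (naive rule)."""
    article = "an" if match.group(2).lower() in "aeiou" else "a"
    if match.group(1)[0].isupper():
        article = article.capitalize()
    return f"{article} {match.group(2)}"

def realize(sentence_spec: str) -> str:
    """Turn a rough sentence specification into a well-formed sentence."""
    text = re.sub(r"\s+", " ", sentence_spec).strip()   # collapse stray whitespace
    text = re.sub(r"\s+([,.;:!?])", r"\1", text)        # no space before punctuation
    text = re.sub(r"\b(a|an)\s+(\w)", _fix_article, text, flags=re.IGNORECASE)
    if text and text[-1] not in ".!?":
        text += "."                                     # ensure terminal punctuation
    return text[:1].upper() + text[1:]

sentence = realize("this is  a ideal jacket for hiking , and it costs $129")
```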

6. Text Realization

Finally, the fully realized text is formatted and delivered in the required medium: plain text, a webpage, a chatbot interface, and so on.
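The delivery step can be as simple as wrapping the same paragraphs differently per medium. The sketch below assumes two media, plain text and HTML; the `deliver` function and its `medium` parameter are hypothetical names.

```python
import html

def deliver(paragraphs, medium="text"):
    """Format finished paragraphs for the target medium."""
    if medium == "text":
        return "\n\n".join(paragraphs)
    if medium == "html":
        # Escape special characters so product data cannot break the markup.
        return "\n".join(f"<p>{html.escape(p)}</p>" for p in paragraphs)
    raise ValueError(f"unsupported medium: {medium}")

page = deliver(["Warm & light.", "Fits most sizes."], medium="html")
```

Keeping formatting separate from generation means the same text can be reused across channels without regenerating it.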

Cutting-edge systems use neural networks and deep learning to handle increasing portions of this pipeline intelligently with less human input through end-to-end training. However, human subject matter experts still play a key role in steps like content determination and validation.

NLG Applications Across Industries

NLG is being widely adopted to automate content creation across sectors:

Journalism

The Associated Press uses Wordsmith by Automated Insights to generate thousands of quarterly earnings stories:

“Wordsmith ingests raw earnings data, understands what the data says, then generates an earnings story in AP style. It publishes these stories to the AP wire, where they land on finance sites like Yahoo! Finance and Nasdaq.”

The Washington Post’s Heliograf produced roughly 300 short reports and alerts during the 2016 Rio Olympics and now generates weekly local high school sports recaps.

E-Commerce

Leading online retailers like Amazon and eBay use NLG to create customized product listings and recommendations at scale. One survey found 76% of shoppers are more likely to buy with detailed product information. NLG provides tailored microcontent matching each user’s context and profile.

Customer Support

Chatbots like Anthropic’s Claude leverage NLG to respond to customer queries with natural, conversational responses tailored to the issue raised and user persona.

Banking & Finance

JPMorgan deploys NLG for earnings previews and clients’ portfolio statements. It generates 3.5K earnings previews quarterly with NLG, freeing analysts to focus on high-value insights.

Research Summaries

Tools like SciFive automatically generate abstracts and summaries of long research papers to boost scientists’ productivity.

While NLG shows promise across domains, there remain challenges and limitations…

Challenges and Ethical Considerations for Responsible NLG

Like any AI technology, NLG comes with risks and limitations that designers must carefully address:

Bias and Representation Issues

Since systems are trained on human-generated datasets, there is a risk of perpetuating or amplifying societal biases around race, gender, and culture unless these are proactively mitigated.

Lack of Factual Understanding

NLG systems today still lack deeper comprehension of factual knowledge and logical reasoning. This can lead to incorrect or nonsensical generated text if proper human validation is not in place.
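Human review can be supported by simple automated checks. One hedged example: flag any number in the generated text that does not appear in the source data. The function name and the sample sentence are hypothetical, and the check only covers numeric claims, not wording or logic.

```python
import re

def unsupported_numbers(text, source_values):
    """Return numbers mentioned in the text that are absent from the source data."""
    allowed = {float(v) for v in source_values}
    # Match integers and decimals, allowing thousands separators such as 1,250.5.
    tokens = re.findall(r"\d[\d,]*(?:\.\d+)?", text)
    found = [float(token.replace(",", "")) for token in tokens]
    return [number for number in found if number not in allowed]

draft = "Revenue rose to 1,250.5 million, up 12 percent."
flags = unsupported_numbers(draft, [1250.5, 10])
```

Any value returned here is a candidate for a reviewer to inspect before the text is published.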

Data Dependence

Performance is heavily reliant on large volumes of high-quality, well-structured data. Insufficient data leads to low-quality or incoherent text.

Impersonation Risks

Advanced NLG chatbots or avatars that mimic humans too closely without revealing their bot identity raise ethical concerns around deception.

Ongoing research on areas like commonsense reasoning, causality, and intent seeks to address these limitations. Responsible NLG design should also incorporate principles of AI ethics around transparency, accountability, and fairness.

As Advait Kumar, Product Manager at Anthropic, writes, "NLG systems must produce explanations justified by the input data" to ensure alignment with facts and ethical norms even as capabilities advance.

The Future: Widespread Adoption Nearing Tipping Point

What does the future look like for NLG and automated text generation? Based on rapid advances in natural language AI, NLG appears poised for massive growth and mainstream adoption in the next 5-10 years. Several developments point to this tipping point:

- Business Demand: The need for personalized, data-driven content at scale is booming across sectors like marketing, finance, legal, and scientific publishing.

- Better Language Models: Transformer-based models like GPT-3 and Wu Dao 2.0 demonstrate new heights in coherent, human-like text generation with minimal input.

- Real-world Impact: Early adopters are already seeing strong results. JPMorgan "reduced earnings story production time by 85% while increasing story count five-fold" using NLG.

- Hybrid AI Approach: Combining NLG with human creativity, judgment, and QA looks like the most promising path to balance automation and quality.

- Advances in Reasoning: Adding stronger abilities for comprehension, common sense, and reasoning will expand possible applications.

- Responsible Development: A growing focus on AI ethics and transparency will spur the creation of accountable NLG systems that avoid harmful biases.

While challenges remain, NLG technology appears poised to transform content creation and knowledge sharing over the next decade as language AI continues to advance.