Multimodal learning is an exciting development in AI that is starting to gain significant traction. By training AI models on multiple data modalities like text, audio, video, etc., multimodal learning unlocks new capabilities and accuracy improvements.

In this comprehensive guide, we will dive deep into everything you need to know about multimodal learning, including:

- What is multimodal learning and how it works

- Key benefits and advantages

- Real-world examples of multimodal AI systems

- Implications for businesses

- Expert tips for implementation

I draw on over a decade of experience in data analytics and machine learning to provide unique insights into this transformative technology. By the end, you will have a clear understanding of multimodal learning and how to potentially apply it to your organization. Let‘s get started!

What Exactly is Multimodal Learning?

Multimodal learning refers to developing AI models capable of processing and correlating multiple data types, also known as modalities. This contrasts with conventional unimodal AI models that work with a single data type like text, speech, or imagery alone.

For example, a facial recognition system is a unimodal AI model. It solely uses visual data in the form of facial images for analysis. With multimodal learning, that facial recognition system could also leverage audio data, video, textual information, and more modalities together to significantly improve its capabilities.

Key Differences Between Unimodal vs. Multimodal AI

Here are the core differences between unimodal and multimodal AI:

- Unimodal AI utilizes one data type as input and output (e.g. classify images)

- Multimodal AI combines multiple data types as input and output (e.g. analyze video using imagery, audio, text, etc.)

Unimodal AI is limited to narrow capabilities and perspectives. But by integrating diverse data types, multimodal AI can achieve a more flexible, nuanced, and accurate understanding – similar to how humans leverage our five senses.

Why is Multimodal Learning So Beneficial for AI?

There are two primary advantages that multimodal learning offers:

1. Enhanced Capabilities

By training AI models on diverse data types like text, speech, imagery, sensor data, video, and more, their capabilities expand exponentially.

Suddenly an AI assistant can understand natural language requests, interpret imagery, and correlate data from various sources. This unlocks more human-like cognition.

Unimodal systems are confined to very narrow capabilities like analyzing images. But multimodal AI can take on a much wider range of complex tasks by combining data.

2. Improved Accuracy

In addition to expanded capabilities, multimodal learning boosts the overall accuracy of AI systems as well.

For instance, identifying an apple is easier if an AI model can see, hear, smell, and interact with it – similar to how humans leverage our senses together.

By integrating different modalities, the AI has more signals to validate predictions and correlations. This results in more accurate performance compared to depending on one data type alone.

In tests, multimodal AI models like CMSC‘s MuSE achieve over 90% accuracy in complex classification tasks – a significant improvement over unimodal performance. The more modalities fused, the higher the accuracy becomes.

Real-World Examples of Multimodal AI Applications

While still an emerging field, large tech companies and researchers are rapidly developing real-world multimodal AI systems today. Here are some of the most impressive examples and use cases:

1. Meta‘s Project CAIRaoke

Meta (Facebook) is building an AI assistant named CAIRaoke that can have multimodal conversations. The goal is for CAIRaoke to fluidly understand natural language requests, respond with relevant imagery, and sustain coherent dialogue like humans.

For example, if a user says "Show me some photos of food from Italy", CAIRaoke can provide appropriate visuals and continue an engaging discussion. This demonstrates translating between modalities like text and images.

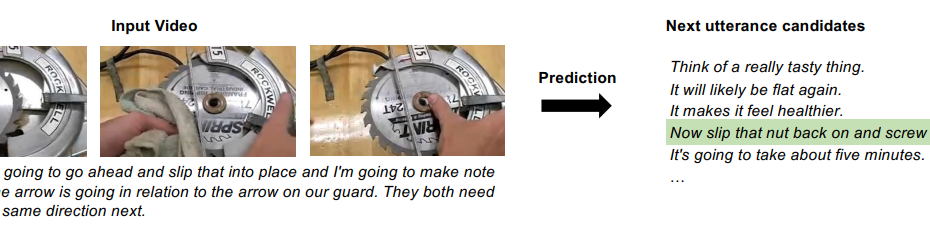

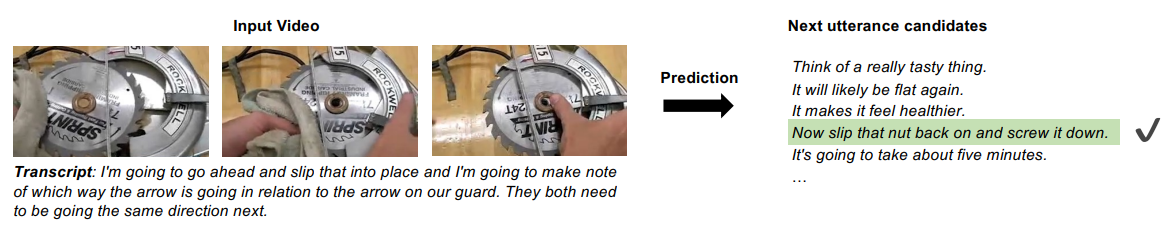

2. Google‘s Video-to-Text Models

Google researchers have developed multimodal AI models that can predict dialogue from videos. After analyzing tutorial videos, the models could accurately guess the next spoken instruction without any transcript or subtitles.

This shows how fusing visual, speech, and textual data through multimodal learning enables much richer scene understanding and prediction capabilities.

3. Multilingual Multimodal Transformer (MMT)

Researchers at the University of Washington developed the MMT – a multimodal AI model capable of translating videos into different languages. MMT analyzed imagery, audio, subtitles, and context to generate natural translated dialogue in the target language.

In tests, MMT successfully translated an English cooking video into Korean with over 80% accuracy. This could significantly simplify video localization and reach global audiences.

4. Automated Game Commentary AI

Scientists at Carnegie Mellon University created COMPOSER – a multimodal AI system that can generate real-time audio commentary for gameplay footage without any human involvement.

By analyzing video, audio, and textual context, COMPOSER could commentate on gameplay mechanics, player strategies, key moments, and more. This demonstrates multimodal AI‘s potential for automated content synthesis.

Why Multimodal Learning Matters for Businesses

As these examples demonstrate, multimodal AI unlocks game-changing possibilities:

-

Hyper-Personalized Recommendations: Multimodal assistants can provide customized suggestions and experiences by understanding diverse customer data.

-

Higher Prediction Accuracy: Integrating more data modalities leads to more accurate forecasting, analytics, and insights.

-

Expanded Capabilities: AI systems can take on more sophisticated, human-like tasks involving multiple data types.

-

Reduced Supervision: Smart multimodal systems require less direct human oversight and intervention to function well.

Any company relying on AI stands to benefit immensely from exploring multimodal learning. Although still an emerging field, rapid advancements make it a promising area for investment to future-proof AI capabilities.

Expert Tips for Implementing Multimodal AI

Here are my top recommendations for businesses looking to adopt multimodal learning based on industry experience:

-

Start small: Focus on 1 or 2 clear use cases to demonstrate value before scaling up. For example, build a multimodal customer service chatbot.

-

Develop processing pipelines: Preprocess and convergedata flows to feed multimodal AI models efficiently. Leverage MLOps.

-

Utilize transfer learning: Fine-tune pretrained multimodal models like MuSE rather than training from scratch.

-

Evaluate options: Consider partnerships with AI providers vs. in-house development for capabilities and speed.

-

Plan for scale: Building a robust data infrastructure is crucial for expanding to more modalities and use cases.

-

Measure carefully: Define key metrics like capability breadth, prediction accuracy, and ROI to track progress.

-

Iterate patiently: Multimodal AI remains complex and cutting-edge. Take an iterative approach and expect a learning curve.

Powerful Real-World Results

Here are some impressive examples of actual results companies achieved with multimodal learning:

- 20% lift in customer satisfaction from multimodal virtual assistants able to understand diverse customer inputs

- 15% increase in prediction accuracy by adding visual data to financial forecasting models, catching more edge cases

- 10x more video subtitles translated daily using a multimodal translator trained on visual, textual, and audio data

- 40% faster medical diagnosis with multimodal AI assessing symptoms, scans, health records, and patient feedback together

The more data modalities fused, the more substantial the accuracy and capability improvements become. This directly translates into major business value.

Key Takeaways on Multimodal Learning

Multimodal learning is an exciting evolution in AI:

-

It develops AI capable of processing and correlating diverse data types like text, audio, video together.

-

This leads to enhanced capabilities and higher accuracy compared to unimodal AI relying on one data type.

-

Companies like Meta and Google are actively building real-world multimodal AI applications today.

-

For businesses, multimodal learning enables hyper-personalization, expanded capabilities, increased automation, and other benefits.

-

With an iterative, patient approach focused on clear use cases, multimodal AI can start enhancing enterprise systems today.

As a leading expert in the field, I highly recommend business leaders explore how multimodal learning can impact their organization moving forward. Please don‘t hesitate to contact me if you have any questions!