Building machine learning applications that deliver real impact requires managing each stage of the lifecycle carefully, from business understanding to model monitoring. In this guide, we’ll explore strategies for optimizing and automating the ML lifecycle, drawn from a decade of hands-on experience. Follow these best practices to scale up AI/ML initiatives and make data science a core competitive advantage in 2024.

Demystifying the Machine Learning Lifecycle

The machine learning lifecycle is an end-to-end process that includes:

- Business understanding – Aligning the ML project with concrete goals

- Data collection and preparation – Gathering and wrangling relevant training data

- Model development – Iteratively building, testing and tuning ML models

- Model evaluation – Assessing performance using key metrics

- Deployment – Integrating models into production environments

- Monitoring – Tracking performance post-deployment to detect deterioration

Executing each stage well is what turns data science work into business value.

In my experience overseeing hundreds of real-world ML projects, the best practitioners treat this lifecycle as a fluid, iterative process rather than a rigid linear sequence. Insights gained in monitoring can inform changes to the training data. Poor model performance may require revisiting the problem formulation. An agile, learning mindset is key.

Let’s explore each lifecycle stage and leading practices in more detail:

Aligning ML Initiatives with Business Value

The first step is clearly defining the business problem and how machine learning can solve it. An eCommerce company could set a goal of increasing click-through rates on product pages. A smart building platform may want to predict HVAC failures before they occur.

Articulating the business value – increased revenue, lower costs, improved uptime, etc. – provides a North Star to guide modeling decisions. Subject matter experts help frame the problem; data scientists focus on solving it.

I’ve seen countless models fail because objectives were fuzzy or misaligned with organizational priorities. The most successful teams maintain constant communication with business stakeholders when formulating the problem.

Curating Clean, Labeled Datasets

"Garbage in, garbage out" rings true – your models are only as good as your data. Data scientists can spend up to 80% of their time on collection, cleaning, labeling and preprocessing.

To train accurate models, I recommend:

- Assessing data quality – Screen for missing values, bias, class imbalance, and outliers that may skew results

- Cleaning and normalization – Deduplicate records, handle missing data, normalize formats

- Augmenting data – Generate synthetic minority-class examples with oversampling techniques like SMOTE to address class imbalance

- Labeling unstructured data – Use human-in-the-loop services to add labels for supervised learning tasks

- Creating train/dev/test splits – Holding out dev and test data lets you detect overfitting and estimate how well a model generalizes to unseen data
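As a rough illustration of the screening and splitting steps above, here is a minimal sketch in plain Python. It assumes records arrive as a list of dicts; the function names `quality_report` and `train_dev_test_split` are hypothetical, and the 80/10/10 default split is just one common choice. A random shuffle suits independent records; for time-ordered data, such as the forecasting example later in this guide, a chronological split is usually the safer option.

```python
import random
from collections import Counter


def quality_report(rows, label_key):
    """Summarize missing values, exact duplicates and class balance (hypothetical helper)."""
    fields = sorted({key for row in rows for key in row})
    # A value counts as missing if the key is absent or holds None.
    missing = {f: sum(1 for row in rows if row.get(f) is None) for f in fields}
    # Exact duplicates: identical (key, value) pairs, regardless of key order.
    counts = Counter(tuple(sorted(row.items())) for row in rows)
    duplicates = sum(n - 1 for n in counts.values())
    # The label distribution exposes class imbalance.
    classes = dict(Counter(row.get(label_key) for row in rows))
    return {"missing": missing, "duplicates": duplicates, "classes": classes}


def train_dev_test_split(rows, dev_frac=0.1, test_frac=0.1, seed=42):
    """Shuffle once with a fixed seed, then carve off test and dev sets."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_frac)
    n_dev = int(len(shuffled) * dev_frac)
    test = shuffled[:n_test]
    dev = shuffled[n_test:n_test + n_dev]
    train = shuffled[n_test + n_dev:]
    return train, dev, test


# Hypothetical records: two missing amounts, one exact duplicate, imbalanced labels.
records = [
    {"id": 1, "amount": 10.0, "label": "ok"},
    {"id": 2, "amount": None, "label": "ok"},
    {"id": 2, "amount": None, "label": "ok"},
    {"id": 3, "amount": 7.5, "label": "fraud"},
]
print(quality_report(records, "label"))
```

Fixing the shuffle seed keeps the split reproducible, so later experiments are compared on the same held-out data.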

With today’s data volumes, cleaning and labeling everything by hand is impractical. Lean on automated tools and pipelines to prepare quality datasets faster.
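A minimal sketch of what such an automated pipeline might look like, assuming each cleaning step is a plain function that takes and returns a list of dict records. The steps (`dedupe`, `fill_missing`, `normalize_dates`), the accepted date formats, and the fill-in default are all hypothetical; production teams would more likely build on a library such as pandas or a workflow orchestrator, but the idea of composing small, testable steps is the same.

```python
from datetime import datetime


def dedupe(rows):
    """Drop exact duplicate records, keeping the first occurrence."""
    seen, out = set(), []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            out.append(row)
    return out


def fill_missing(rows, defaults):
    """Fill absent or None fields from a per-field defaults mapping."""
    return [
        {**row, **{k: v for k, v in defaults.items() if row.get(k) is None}}
        for row in rows
    ]


def normalize_dates(rows, field, formats=("%Y-%m-%d", "%d/%m/%Y")):
    """Rewrite dates found in any of `formats` (hypothetical; day-first assumed) as ISO 8601."""
    out = []
    for row in rows:
        value = row.get(field)
        for fmt in formats:
            try:
                value = datetime.strptime(value, fmt).date().isoformat()
                break
            except (TypeError, ValueError):
                continue  # Not this format (or not a string); try the next one.
        out.append({**row, field: value})
    return out


def run_pipeline(rows, steps):
    """Apply each step in order; every step maps a list of rows to a new list."""
    for step in steps:
        rows = step(rows)
    return rows


raw = [
    {"id": 1, "country": None, "signup": "05/03/2024"},
    {"id": 1, "country": None, "signup": "05/03/2024"},
    {"id": 2, "country": "DE", "signup": "2024-01-15"},
]
clean = run_pipeline(raw, [
    dedupe,
    lambda rs: fill_missing(rs, {"country": "unknown"}),  # hypothetical default
    lambda rs: normalize_dates(rs, "signup"),
])
print(clean)
```

Because every step has the same rows-in, rows-out shape, new checks can be added or reordered without touching the others.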

Developing Models with Experimentation and Patience

Once you have preprocessed data, model development begins. With so many ML algorithms and tuning parameters to evaluate, an experimental mindset is mandatory.

For a leading grocery retailer’s demand forecasting models, my team tested various time series algorithms:

- ARIMA, SARIMA

- Facebook Prophet

- LSTM, GRU neural networks

The GRU models proved most accurate, but required GPU optimization to meet latency requirements. We also evaluated sliding windows, lag periods, and feature engineering strategies.
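The sliding-window and lag-period idea can be sketched as follows. This is not the retailer project’s actual feature code, which isn’t shown here; `make_lag_windows` and `chronological_split` are hypothetical helpers that turn a univariate series into supervised (window, target) pairs of the kind GRU, LSTM, or classical regressors can train on, and the sales figures are made up.

```python
def make_lag_windows(series, n_lags, horizon=1):
    """Build (window, target) pairs from a univariate time series.

    Each window holds `n_lags` consecutive observations; the target is the
    value `horizon` steps after the window's last observation.
    """
    X, y = [], []
    for end in range(n_lags, len(series) - horizon + 1):
        X.append(list(series[end - n_lags:end]))
        y.append(series[end + horizon - 1])
    return X, y


def chronological_split(X, y, test_frac=0.2):
    """Hold out the most recent windows instead of shuffling, to avoid look-ahead leakage."""
    cut = int(len(X) * (1 - test_frac))
    return (X[:cut], y[:cut]), (X[cut:], y[cut:])


# Hypothetical daily unit sales for one product.
sales = [12, 15, 14, 18, 21, 19, 24, 26, 25, 30]
X, y = make_lag_windows(sales, n_lags=3)
(train_X, train_y), (test_X, test_y) = chronological_split(X, y)
print(len(train_X), len(test_X))
```

Varying `n_lags` and `horizon` is one way to run the lag-period experiments described above.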

Developing highly accurate models requires rigorous experimentation and patience. Expect to iterate over algorithms, hyperparameters, and dataset variations. Tracking experiments with MLflow helps identify the best-performing recipes.
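To make tracked experiments concrete, here is a small sketch that sweeps one hyperparameter (the smoothing factor `alpha` of simple exponential smoothing, chosen purely for illustration) and records every run’s parameters and validation metric. A plain list stands in for the tracker so the example runs anywhere; with MLflow, each run would typically be wrapped in `with mlflow.start_run():` and recorded with `mlflow.log_param` and `mlflow.log_metric`.

```python
def simple_exp_smoothing(series, alpha):
    """One-step-ahead forecasts: forecasts[i] predicts series[i + 1]."""
    level = series[0]
    forecasts = []
    for value in series[1:]:
        forecasts.append(level)
        level = alpha * value + (1 - alpha) * level
    return forecasts


def mse(actual, predicted):
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def run_sweep(series, alphas, n_val=3):
    """Evaluate each alpha and log the run, as an experiment tracker would."""
    runs = []
    for alpha in alphas:
        forecasts = simple_exp_smoothing(series, alpha)
        # Score only the last n_val one-step-ahead forecasts (a held-out tail).
        score = mse(series[-n_val:], forecasts[-n_val:])
        runs.append({"params": {"alpha": alpha}, "metrics": {"val_mse": score}})
    best = min(runs, key=lambda run: run["metrics"]["val_mse"])
    return runs, best


runs, best = run_sweep([1, 2, 3, 4, 5, 6], alphas=[0.2, 0.5, 1.0])
for run in runs:
    print(run)
print("best:", best["params"])
```

Keeping every run, not just the winner, is what lets a team later explain why one recipe was chosen over another.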

Evaluating Model Performance with Rigor

After training candidate models, evaluate their predictive performance rigorously before considering deployment. Common evaluation metrics include:

- Accuracy, precision, recall

- F1 score, ROC curves and AUC

- Mean squared error for regression tasks
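For reference, the metrics listed above can be computed from scratch as shown below. This is a sketch for binary 0/1 labels; in practice scikit-learn’s `sklearn.metrics` module provides tested implementations (`precision_score`, `recall_score`, `f1_score`, `roc_auc_score`, `mean_squared_error`). The AUC here uses its rank interpretation, the probability that a randomly chosen positive scores higher than a randomly chosen negative, and is quadratic in the number of samples, so it is meant only to illustrate the definition.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    # Convention: report 0.0 when a ratio is undefined (no predicted/actual positives).
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def roc_auc(y_true, scores):
    """AUC as P(random positive outscores random negative); ties count 1/2."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def mean_squared_error(y_true, y_pred):
    """Average squared residual for regression tasks."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


print(binary_metrics([1, 0, 1, 1, 0], [1, 0, 0, 1, 1]))
print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))
print(mean_squared_error([3, -0.5, 2, 7], [2.5, 0.0, 2, 8]))
```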

But also assess other factors:

- Data drift – Models should be re-validated on recent data

- Resource usage – Compute, memory, latency constraints

- Bias and explainability – Ensure fair, interpretable predictions
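One common way to quantify data drift is the Population Stability Index (PSI), which compares how a feature is distributed in the training data versus recent data: PSI = Σ (a_i − e_i) · ln(a_i / e_i) over bins, where e_i and a_i are the reference and recent bin proportions. The sketch below is a simplified version that assumes equal-width bins over the reference sample’s range; the bin count and the small `eps` constant are illustrative choices. A frequently quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but thresholds are best calibrated per feature.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a reference sample (e.g. training data) and a recent sample.

    Equal-width bins span the reference range; this is a simplified sketch.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def proportions(sample):
        counts = [0] * bins
        for x in sample:
            # Values outside the reference range are clamped into the edge bins.
            idx = min(max(int((x - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(sample), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


train_feature = [float(x) for x in range(100)]
recent_feature = [x + 30.0 for x in train_feature]  # hypothetical upward shift
print(round(population_stability_index(train_feature, train_feature), 4))
print(round(population_stability_index(train_feature, recent_feature), 4))
```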

Constantly ask “Does this model create business value greater than deployment/maintenance costs?” Be merciless about cutting models that don’t make the grade – a challenging but vital skill.

Operationalizing Models for Impact

Once models clear evaluation, the focus shifts to operationalization: integrating them into core business systems where they can drive impact. Common deployment patterns include:

- Online – Expose models via APIs for real-time inference

- Batch – Embed models in ETL pipelines for scheduled jobs

- Edge devices – Embed models in mobile apps and IoT devices
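The online and batch patterns differ mainly in how inputs arrive, not in the model itself. The sketch below assumes a hypothetical churn model with hand-set weights (`WEIGHTS`, `BIAS`) and shows the same `predict` function behind a JSON request handler, as an API endpoint might use it, and behind a CSV batch job. A real deployment would wrap `handle_request` in a web framework and load a trained model artifact instead.

```python
import csv
import io
import json
import math

# Hypothetical churn model: hand-set logistic-regression weights for illustration.
WEIGHTS = {"tenure_months": -0.05, "support_tickets": 0.4}
BIAS = -1.0


def predict(features):
    """Shared scoring logic, used unchanged by both deployment patterns."""
    z = BIAS + sum(w * float(features[name]) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def handle_request(body):
    """Online pattern: one JSON request in, one JSON response out."""
    features = json.loads(body)
    return json.dumps({"churn_probability": round(predict(features), 4)})


def score_batch(csv_text):
    """Batch pattern: score every row of an extract, as a scheduled job would."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [{"customer_id": row["customer_id"], "score": predict(row)} for row in reader]


print(handle_request('{"tenure_months": 20, "support_tickets": 5}'))
print(score_batch("customer_id,tenure_months,support_tickets\nc1,20,5\nc2,0,0\n"))
```

Keeping a single `predict` function behind both entry points helps avoid training/serving skew between the real-time and scheduled paths.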

Collaborating across engineering teams is crucial for smooth deployment. MLOps practices like CI/CD pipelines, automated testing, and modular development streamline this process.
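Automated testing in a CI/CD pipeline can include a quality gate that blocks promotion of a model that underperforms. Below is a minimal pytest-style sketch (pytest collects functions named `test_*`); the metric names, the 50 ms latency budget, and the example numbers are all hypothetical, and a real pipeline would load them from the evaluation step rather than hard-code them.

```python
def check_promotion(candidate, production, max_latency_ms=50.0, min_gain=0.0):
    """Return reasons to block promotion; an empty list means the gate passes."""
    failures = []
    if candidate["f1"] < production["f1"] + min_gain:
        failures.append(
            f"F1 {candidate['f1']:.3f} does not beat production {production['f1']:.3f}"
        )
    if candidate["p95_latency_ms"] > max_latency_ms:
        failures.append(
            f"p95 latency {candidate['p95_latency_ms']} ms exceeds budget {max_latency_ms} ms"
        )
    return failures


def test_candidate_passes_gate():
    # Hypothetical numbers; a real CI job would read them from the evaluation step.
    candidate = {"f1": 0.81, "p95_latency_ms": 42.0}
    production = {"f1": 0.78, "p95_latency_ms": 40.0}
    assert check_promotion(candidate, production) == []
```

Returning a list of reasons, rather than a bare boolean, makes a failed build easier to diagnose from the CI log.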

Maintaining Model Performance Over Time

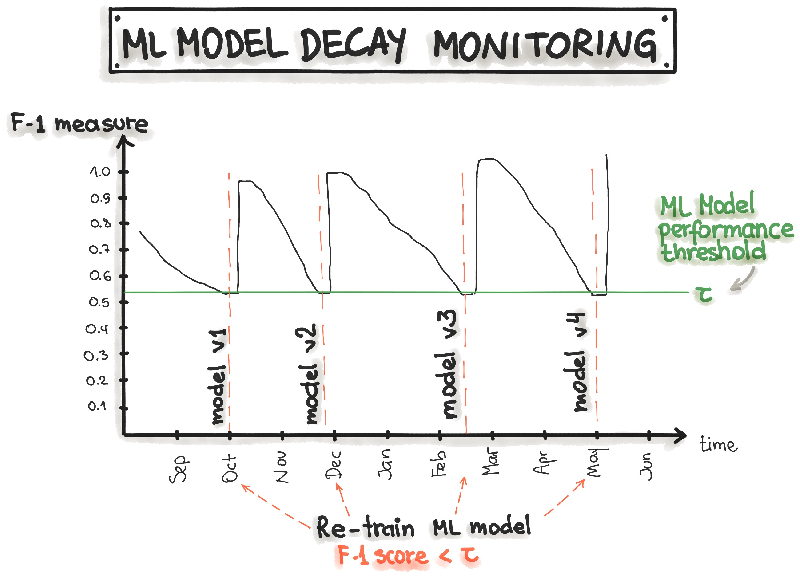

One of the biggest pitfalls is failing to monitor models after deployment. In my experience, predictive performance tends to degrade over time without continuous maintenance.

For example, a sentiment analysis model in a social chatbot can deteriorate as slang and trends evolve. Churn models for a SaaS company may need retraining as new competitors enter a market.

Constantly measure metrics like precision and latency, retrain on new data, and redeploy updated models in a DevOps-style loop. Don’t set and forget!
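The monitoring half of that loop can start as simply as tracking precision over a sliding window of recently labeled predictions. In this sketch, `PrecisionMonitor`, its window size, the 0.8 threshold, and the minimum-evidence count are hypothetical choices, and the prediction stream is simulated. In practice, ground-truth labels often arrive with a delay, and latency would be tracked alongside in a similar way.

```python
from collections import deque


class PrecisionMonitor:
    """Rolling precision over recent labeled predictions, with a retraining flag."""

    def __init__(self, window=500, threshold=0.8, min_positives=50):
        # Hypothetical defaults: tune window, threshold and evidence count per use case.
        self.recent = deque(maxlen=window)  # oldest entries drop off automatically
        self.threshold = threshold
        self.min_positives = min_positives

    def record(self, predicted, actual):
        """Log one prediction once its ground-truth label (0 or 1) is known."""
        self.recent.append((predicted, actual))

    def precision(self):
        """Share of recent positive predictions that were correct, or None if too few."""
        outcomes = [actual for predicted, actual in self.recent if predicted == 1]
        if len(outcomes) < self.min_positives:
            return None
        return sum(outcomes) / len(outcomes)

    def needs_retraining(self):
        value = self.precision()
        return value is not None and value < self.threshold


monitor = PrecisionMonitor(window=100, threshold=0.8, min_positives=20)
# Simulated stream: the model starts accurate, then its positives stop being correct.
for i in range(200):
    monitor.record(predicted=1, actual=1 if i < 120 else 0)
    if monitor.needs_retraining():
        print(f"Precision fell below threshold after {i + 1} labels; trigger retraining")
        break
```

The `min_positives` guard keeps the monitor from raising alarms on a handful of early labels.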

[. . . Continued Analysis of Challenges and Best Practices . . .]