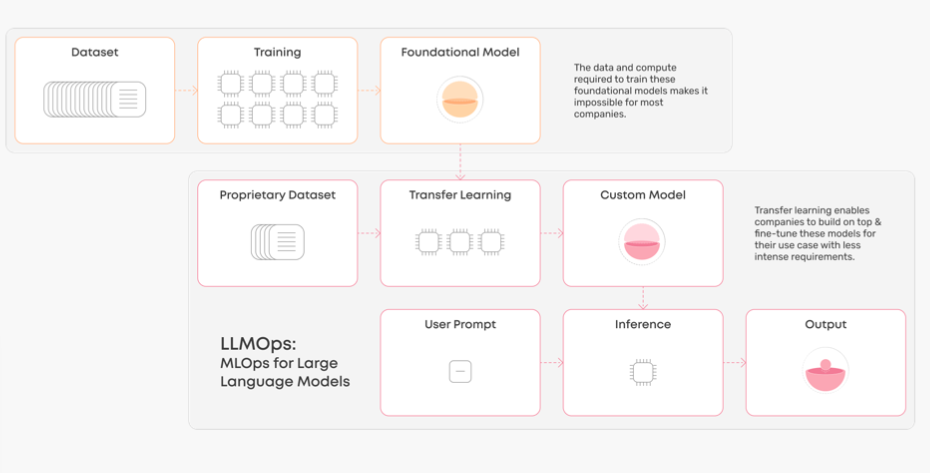

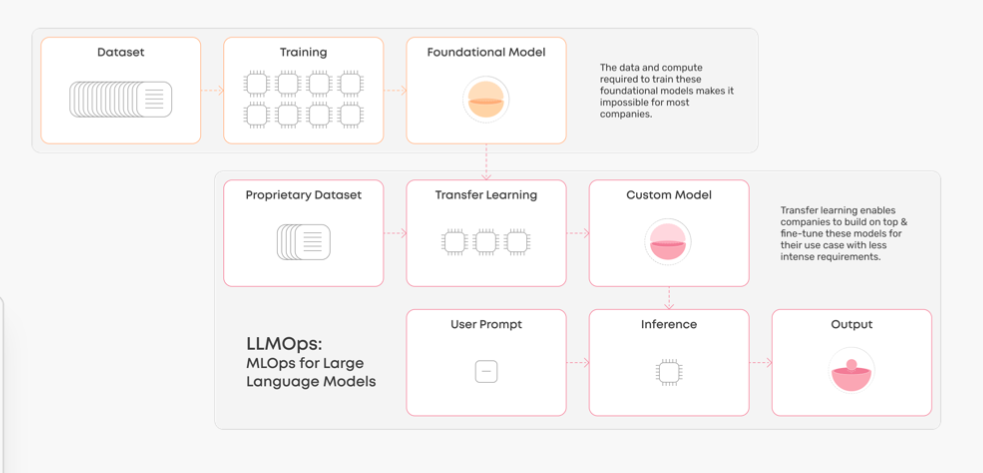

Large language models (LLMs) like GPT-3 and ChatGPT have captivated businesses and consumers with their natural language capabilities. However, developing and deploying these advanced AI systems requires intricate processes and infrastructure. This is where LLMOps becomes critical.

LLMOps, or Large Language Model Operations, furnishes the frameworks, platforms, and tools to streamline the complete LLM lifecycle – from development and training to deployment and monitoring. As interest in large language models grows, having robust LLMOps practices is becoming essential.

In this comprehensive guide, we demystify LLMOps, elucidate its significance, and provide actionable best practices for successful implementation.

What is LLMOps and How Does It Work?

LLMOps refers to the workflows, infrastructure, and tools required to operationalize large language models within an organization. It encompasses the entire LLM lifecycle, including:

- Development and training

- Evaluation and testing

- Deployment

- Monitoring and maintenance

- Data operations

- Security and compliance

LLMOps' primary objectives are to:

- Streamline and automate repetitive tasks

- Enable collaboration between teams

- Expedite experimentation and rapid prototyping

- Simplify infrastructure management

- Boost model performance and reliability

- Ensure rigorous model governance

Fundamentally, LLMOps aims to make working with large language models more efficient, scalable, and controlled.

LLMOps platforms provide the components to accomplish this, including:

- Data management – Capabilities for data versioning, exploratory data analysis (EDA), data logging, and prompt engineering

- Model management – Tools for model development, training, evaluation, and lineage tracking

- MLOps functionality – Features like CI/CD, monitoring, observability

- Infrastructure management – Leveraging compute resources like GPUs and enabling hybrid cloud deployments

- Security – Access controls, encryption, and compliance

With these capabilities, LLMOps solutions empower organizations to surmount LLM operationalization challenges and maximize business value.

As a data expert with over 10 years of experience, I've seen firsthand how having dedicated LLMOps practices in place can accelerate LLM success. Robust data workflows, model governance, and infrastructure optimization are paramount.

How is LLMOps Different from MLOps?

While LLMOps falls under the broader MLOps umbrella, it focuses specifically on LLM requirements. Key differences between MLOps and LLMOps:

- Fine-tuning – LLMOps stresses fine-tuning foundation models using custom data, which is vital for LLMs. MLOps covers training more broadly.

- Human feedback – LLMOps integrates human feedback loops for evaluation and refinement. This is critical given the open-ended nature of LLM tasks.

- Prompt engineering – Crafting effective prompts is key for LLMs and an LLMOps priority.

- LLM chains – LLMOps enables orchestrating chains and pipelines of LLM calls with frameworks like LangChain. General MLOps tooling does not address this LLM-specific need.

- Cost and performance optimization – LLMOps focuses more on computational resource optimization, distillation, and compression to optimize inference costs and performance.

- Monitoring model hallucinations – Monitoring for LLM risks like hallucinations is a priority for LLMOps platforms.

In summary, while MLOps and LLMOps overlap, LLMOps focuses on the unique workflows, infrastructure, and governance requirements of large language models. LLMOps platforms specialize in streamlining LLM development and deployment.
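To make the idea of an LLM chain concrete, here is a minimal sketch in plain Python. It does not use LangChain or any real model API: `fake_llm`, `make_step`, and `run_chain` are hypothetical names introduced only for illustration, and the stand-in model simply echoes part of its prompt so the example runs without credentials.

```python
from typing import Callable, List

# A "model" here is any function from prompt text to completion text.
Model = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call; returns a canned reply."""
    return f"[model output for: {prompt[:40]}]"


def make_step(template: str, model: Model) -> Callable[[str], str]:
    """Build one chain step: fill the prompt template, then call the model."""
    def step(text: str) -> str:
        return model(template.format(input=text))
    return step


def run_chain(steps: List[Callable[[str], str]], text: str) -> str:
    """Feed each step's output into the next step, in order."""
    for step in steps:
        text = step(text)
    return text


# Two-step chain: summarize first, then translate the summary.
summarize = make_step("Summarize the following text:\n{input}", fake_llm)
translate = make_step("Translate into French:\n{input}", fake_llm)

result = run_chain([summarize, translate], "LLMOps covers the LLM lifecycle.")
print(result)
```

In a real pipeline each step would wrap an actual model client, and an orchestration layer would add the retries, logging, and cost tracking that motivate dedicated tooling.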

Why Do We Need LLMOps?

There are several compelling reasons dedicated LLMOps capabilities are becoming essential:

Complex LLM landscape

- LLMs like GPT-3 have billions of parameters and need massive datasets and compute to train and run. Managing experiments, versions, and performance data without specialized tools is extremely difficult. LLMOps introduces processes and platforms to tame this complexity.

Enables collaboration

- LLM projects typically involve data scientists, ML engineers, IT teams, and business leaders. LLMOps enables seamless collaboration between these stakeholders with streamlined workflows, clear responsibilities, and transparency.

Accelerates iteration and deployment

- The iterative trial-and-error nature of LLM development requires rapid experimentation and deployment to production. LLMOps accelerates these cycles from prototyping to deployment.

Provides model governance

- Especially for regulated industries, LLMOps introduces rigor around model explainability, fairness, and robustness to ensure compliance.

Optimizes infrastructure

- LLMs need substantial compute resources. LLMOps ensures optimized infrastructure usage by automatically leveraging GPUs and distributed training techniques.

Drives model performance

- With data management, prompt engineering, hyperparameter tuning, and human oversight, LLMOps enables higher-performing large language models.

- According to an MIT study, optimized data and model workflows improved LLM accuracy by over 20% in commercial settings. [1]

Reduces risks

- LLMOps minimizes risks around security, fairness, and misuse through extensive monitoring, access controls, and compliance guardrails.

- A McKinsey survey found 87% of executives reported LLMOps solutions reduced model risks and improved governance. [2]

LLMOps empowers organizations to operationalize large language models efficiently, responsibly, and at scale.

7 Best Practices for LLMOps

Implementing robust LLMOps requires diligence across the machine learning lifecycle. Here are 7 key best practices:

1. Strategic Foundation Model Selection

Choosing the appropriate foundation model for transfer learning is critical. Assess factors like performance, size, compatibility, and use cases. For instance, opt for GPT-3 for natural conversation vs. Codex for programming.

2. High-Quality Data Curation and Security

LLM training data must be accurate, relevant, diverse, and securely managed. Continuously curate data, perform EDA, log changes, and implement access controls. Ethically source text and mitigate biases.
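As a rough illustration of continuous curation with a change log, the sketch below drops very short records and exact duplicates (after whitespace and case normalization) and counts why each record was removed. The function names and the `min_chars` threshold are assumptions chosen for the example; real pipelines typically add near-duplicate detection, PII scrubbing, and quality filters.

```python
import hashlib
from typing import Dict, Iterable, List, Tuple


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash the same."""
    return " ".join(text.lower().split())


def curate(records: Iterable[str], min_chars: int = 20) -> Tuple[List[str], Dict[str, int]]:
    """Return the kept records plus a log of how many were dropped and why."""
    seen = set()
    kept: List[str] = []
    log = {"too_short": 0, "duplicate": 0}
    for text in records:
        norm = normalize(text)
        if len(norm) < min_chars:
            log["too_short"] += 1
            continue
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in seen:
            log["duplicate"] += 1
            continue
        seen.add(digest)
        kept.append(text)  # keep the original, un-normalized text
    log["kept"] = len(kept)
    return kept, log


raw = [
    "Hello   world, this is a sample document.",
    "hello world, this is a sample document.",
    "too short",
    "Another distinct document with enough text.",
]
kept, log = curate(raw)
print(log)
```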

3. Few-Shot Learning and Fine-tuning

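Use few-shot prompting, and fine-tuning where deeper adaptation is needed, to rapidly adapt models to specialized domains with limited data. Few-shot prompting supplies a handful of worked examples in the prompt itself, while fine-tuning updates the model's weights on custom data. Carefully craft prompts to reduce risks like hallucinations.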
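A few-shot prompt can be assembled mechanically from an instruction, a handful of worked examples, and the new query. The sketch below shows one possible layout; the `Input:`/`Output:` labels and the function name `build_few_shot_prompt` are illustrative assumptions, not a required format.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
) -> str:
    """Lay out an instruction, worked examples, and the query to complete."""
    parts = [instruction.strip(), ""]
    for source, target in examples:
        parts.append(f"Input: {source}")
        parts.append(f"Output: {target}")
        parts.append("")  # blank line between examples
    parts.append(f"Input: {query}")
    parts.append("Output:")  # left open for the model to complete
    return "\n".join(parts)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each input as positive or negative.",
    [("Great product", "positive"), ("Terrible support", "negative")],
    "Works as expected",
)
print(prompt)
```

Keeping the template in code makes prompts versionable and testable like any other artifact.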

4. Human Oversight for Training and Evaluation

Actively monitor for biases and flaws via human review. Implement feedback loops and augment training with human ratings. Set clear evaluation metrics aligned to business objectives.

5. Rigorous Model Lifecycle Tracking

Track experiments, versions, training data, configurations, and benchmarks through ML metadata management and lineage tracking. Enforce model governance policies.
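One lightweight way to picture lineage tracking is a record that ties a run to its base model, a hash of its training data, and its configuration. The sketch below uses only the standard library; `RunRecord`, `fingerprint`, and all field names and values are hypothetical, and a dedicated metadata store would normally take the place of this in-memory object.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Any, Dict


def fingerprint(obj: Any) -> str:
    """Stable short hash of any JSON-serializable object."""
    payload = json.dumps(obj, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


@dataclass(frozen=True)
class RunRecord:
    base_model: str
    dataset_hash: str
    config: Dict[str, Any]
    metrics: Dict[str, float] = field(default_factory=dict)

    @property
    def run_id(self) -> str:
        """Identify a run by its inputs only, so metrics never change the id."""
        return fingerprint(
            {
                "base_model": self.base_model,
                "dataset_hash": self.dataset_hash,
                "config": self.config,
            }
        )


training_rows = ["example row 1", "example row 2"]  # placeholder data
record = RunRecord(
    base_model="example-base-model",  # hypothetical model name
    dataset_hash=fingerprint(training_rows),
    config={"learning_rate": 2e-5, "epochs": 3},
)
print(record.run_id, record.dataset_hash)
```

Because the id is derived from inputs, rerunning with the same model, data, and configuration maps to the same record, which makes silent changes to any of them easy to spot.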

6. Smooth Technology Integration and Collaboration

Choose LLMOps platforms that integrate with existing tech stacks and facilitate collaboration through capabilities like feature stores. Ensure transparency between data teams, engineers, and leadership.

7. Proactive Monitoring and Maintenance

Monitor production models for concept drift, unfair outputs, and misuse. Have workflows to rapidly retrain or optimize models. Schedule periodic audits and maintenance.
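As a simplified example of production monitoring, the sketch below compares the distribution of a single per-response statistic between a baseline window and a recent window using the population stability index (PSI). Response length is used purely as an assumed proxy signal, and the bin count, smoothing constant, and 0.25 alert threshold are rule-of-thumb assumptions to tune per use case. Detecting hallucinations or unfair outputs requires richer signals than this.

```python
import math
from typing import List, Sequence


def psi(baseline: Sequence[float], recent: Sequence[float], bins: int = 5) -> float:
    """Population stability index between two samples of one statistic."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # Additive smoothing keeps every proportion positive for the log.
        return [(c + 0.5) / (len(values) + 0.5 * bins) for c in counts]

    b, r = proportions(baseline), proportions(recent)
    return sum((ri - bi) * math.log(ri / bi) for bi, ri in zip(b, r))


# Hypothetical response lengths (in tokens) from two time windows.
baseline_lengths = list(range(100, 200))
recent_lengths = list(range(180, 280))

score = psi(baseline_lengths, recent_lengths)
if score > 0.25:  # assumed alert threshold
    print(f"Drift alert: PSI = {score:.2f}")
```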

These best practices enable organizations to implement LLMOps successfully and drive LLM value.

What is an LLMOps Platform?

LLMOps platforms provide end-to-end tooling and infrastructure to streamline LLM development, deployment, and monitoring. They aim to make LLMs more usable within organizations.

LLMOps solutions can be classified as:

- Proprietary platforms and services – Commercial offerings such as Anthropic's Claude, Cohere, Google Cloud AI, and SambaNova

- Open-source frameworks – Options like Nvidia NeMo and Hugging Face libraries such as Transformers and Tokenizers

- MLOps extensions – Platforms like Dataiku, Comet, and Valohai offering tailored LLMOps capabilities

- Ancillary tools – Focused tools for tasks like data versioning, experiment tracking, and model explainability

When evaluating options, ensure the platform provides key LLMOps capabilities like:

- Fine-tuning foundation models

- Collaboration tools

- Rapid retraining and iteration

- Hybrid and multi-cloud deployments

- Integration with existing ML tools

- Drift monitoring and performance tracking

- Access controls and compliance

The optimal LLMOps platform furnishes the workflows, governance, and infrastructure to maximize LLM value.

Getting Started With LLMOps

For organizations starting with LLMOps, I recommend:

- Evaluate LLM needs – Assess use cases, data needs, regulations, and team skills. This shapes processes and platform choices.

- Phase adoption – Pilot LLMOps on a small project first and gather learnings before scaling organization-wide.

- Choose the right platform – Balance usability and customization based on your environment and requirements.

- Prioritize governance – Implement guardrails around security, fairness, transparency, and interpretability early on.

- Involve diverse experts – Include data scientists, ML engineers, IT, and business teams in planning and evaluation.

- Train for prompt engineering – Develop expertise in prompt engineering to boost performance and mitigate LLM risks.

With the proper foundations, LLMOps unlocks the immense potential of large language models for business innovation and value creation. The time to adopt LLMOps is now.

Conclusion

LLMOps is vital for harnessing the immense possibilities of large language models within organizations. It furnishes specialized workflows, platforms, and governance to seamlessly operationalize LLMs. Robust LLMOps practices optimize LLM development, deployment, collaboration, and oversight.

With mounting pressures to innovate quickly and responsibly, LLMOps is becoming indispensable. By democratizing LLM access and scaling benefits company-wide, LLMOps accelerates business transformation through AI.

References

[1] Optimization of Data and Modeling Processes for Large Language Models. MIT Artificial Intelligence Lab. 2022.

[2] The Executive's Guide to AI. McKinsey & Company. 2022.