The rapid proliferation of artificial intelligence is enabling businesses worldwide to achieve unprecedented productivity and innovation. As organizations scramble to capitalize on AI's immense potential, two pivotal technology frameworks have emerged: LLMOPS and MLOPS.

Understanding the differences between these two approaches is crucial for applying AI effectively given your specific business objectives, resources, and use cases. This guide provides the insights you need to determine whether LLMOPS or MLOPS aligns more closely with your needs in 2024 and beyond.

As your friendly guide, I'll draw on my decade of experience in data analytics to give you actionable insights and help you identify the right choice for your organization. Let's get started!

Demystifying LLMOPS and MLOPS

Before directly comparing LLMOPS and MLOPS, let's build a solid foundational understanding of both frameworks.

What is LLMOPS?

LLMOPS stands for Large Language Model Operations. It is a set of practices for integrating large language models into the AI development pipeline.

LLMOPS supports the continuous fine-tuning, evaluation, and deployment of large language models that serve as the engine powering AI applications. Rather than building custom ML models from scratch, teams using LLMOPS build on the capabilities of large pretrained models such as GPT-3, Claude, and Turing-NLG.

According to an Anthropic survey, over 75% of enterprises are actively experimenting with or implementing large language models, indicating the immense interest in LLMOPS.

Key Components of LLMOPS

- Foundation Model Selection: Choosing a foundation model suited to the problem domain. Leading options include GPT-3, Turing-NLG, Anthropic's Claude, and others.
- Data Management: High-quality, well-curated data is essential for fine-tuning foundation models and for rigorously evaluating their performance.
- Deployment & Monitoring: Embedding language models into applications requires closely tracking metrics such as latency, error rates, and throughput bottlenecks.
- Evaluation & Benchmarking: Assessing fine-tuned models against standardized benchmarks such as SuperGLUE, alongside task-specific test sets, shows how well they are being adapted to specific use cases.
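The deployment and monitoring point can be made concrete with a small Python sketch that wraps any model-calling function and records latency and failures. `fake_model` is a hypothetical stand-in for a real LLM API, and the in-memory `CallStats` class is an illustrative assumption; a production system would typically export these numbers to a metrics backend instead.

```python
import math
import time
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class CallStats:
    """In-memory record of per-call latency (seconds) and error count."""
    latencies: List[float] = field(default_factory=list)
    errors: int = 0

    @property
    def calls(self) -> int:
        return len(self.latencies)

    def p95_latency(self) -> float:
        """Nearest-rank 95th-percentile latency; 0.0 before any calls."""
        if not self.latencies:
            return 0.0
        ordered = sorted(self.latencies)
        rank = math.ceil(0.95 * len(ordered))
        return ordered[rank - 1]


def monitored(model_fn: Callable[[str], str], stats: CallStats) -> Callable[[str], str]:
    """Wrap a model-calling function so each call records latency and failures."""
    def wrapper(prompt: str) -> str:
        start = time.perf_counter()
        try:
            return model_fn(prompt)
        except Exception:
            stats.errors += 1
            raise  # re-raise so callers still see the failure
        finally:
            # Runs for successful and failed calls alike.
            stats.latencies.append(time.perf_counter() - start)
    return wrapper


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM API call; rejects empty prompts."""
    if not prompt:
        raise ValueError("empty prompt")
    return prompt.upper()


stats = CallStats()
call = monitored(fake_model, stats)
for p in ["hello", "", "world"]:
    try:
        call(p)
    except ValueError:
        pass  # a real service would log or alert here
print(stats.calls, stats.errors)  # 3 1
```

Wrapping the call, rather than instrumenting each caller, keeps the measurement consistent wherever the model is used.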

Prominent offerings in the LLMOPS space include Anthropic's Claude and Cohere's language models, along with alignment techniques such as Anthropic's Constitutional AI.

What is MLOPS?

MLOPS stands for Machine Learning Operations, an approach to streamlining and automating ML workflows. Its aim is to significantly accelerate the development and deployment of ML-powered applications.

Unlike LLMOPS, which builds on general-purpose language models, MLOPS focuses on constructing tailored ML pipelines.

Key facets of MLOPS include:

- Infrastructure & Tooling: MLOPS requires dedicated infrastructure for scalable model deployment, monitoring, and management.
- Automation & Orchestration: End-to-end automation of data preprocessing, model training, evaluation, and deployment.
- Collaboration: Cross-functional teams of data scientists, ML engineers, and IT professionals closely collaborate on the ML lifecycle.
- Governance & Security: Business KPIs, model performance metrics, regulatory compliance, and security protocols govern the ML process.
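To illustrate the automation and orchestration facet, here's a toy Python pipeline that chains preprocessing, training, evaluation, and a gated deployment step. The threshold "model", the sample records, and the 0.9 accuracy gate are all hypothetical choices for illustration; real MLOPS platforms add scheduling, versioning, and monitoring around steps like these.

```python
from typing import Dict, List, Tuple

Example = Tuple[float, int]  # (feature, binary label)


def preprocess(raw: List[Tuple[str, str]]) -> List[Example]:
    """Parse raw string records, dropping any that fail to parse."""
    clean: List[Example] = []
    for x, y in raw:
        try:
            clean.append((float(x), int(y)))
        except ValueError:
            continue  # skip malformed rows instead of failing the whole run
    return clean


def train(data: List[Example]) -> float:
    """'Train' a threshold classifier: midpoint between the two class means."""
    pos = [x for x, y in data if y == 1]
    neg = [x for x, y in data if y == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2


def evaluate(threshold: float, data: List[Example]) -> float:
    """Accuracy of predicting label 1 when the feature exceeds the threshold."""
    correct = sum((x > threshold) == bool(y) for x, y in data)
    return correct / len(data)


def run_pipeline(raw, holdout, min_accuracy: float = 0.9) -> Dict[str, object]:
    """Run every stage in order and only 'deploy' if the quality gate passes."""
    train_data = preprocess(raw)
    test_data = preprocess(holdout)
    threshold = train(train_data)
    accuracy = evaluate(threshold, test_data)
    return {"threshold": threshold, "accuracy": accuracy,
            "deployed": accuracy >= min_accuracy}


raw = [("1.0", "0"), ("2.0", "0"), ("bad", "1"), ("8.0", "1"), ("9.0", "1")]
holdout = [("1.5", "0"), ("8.5", "1"), ("3.0", "0"), ("7.0", "1")]
print(run_pipeline(raw, holdout))
# {'threshold': 5.0, 'accuracy': 1.0, 'deployed': True}
```

The quality gate is the key design choice: a new model only replaces the old one when it clears an agreed metric threshold.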

According to a recent IBM study, 87% of organizations have adopted MLOPS to some degree, underlining its immense traction.

Leading MLOPS platforms include DataRobot, H2O Driverless AI, and more.

Now that we've clarified both approaches, let's explore how LLMOPS and MLOPS diverge.

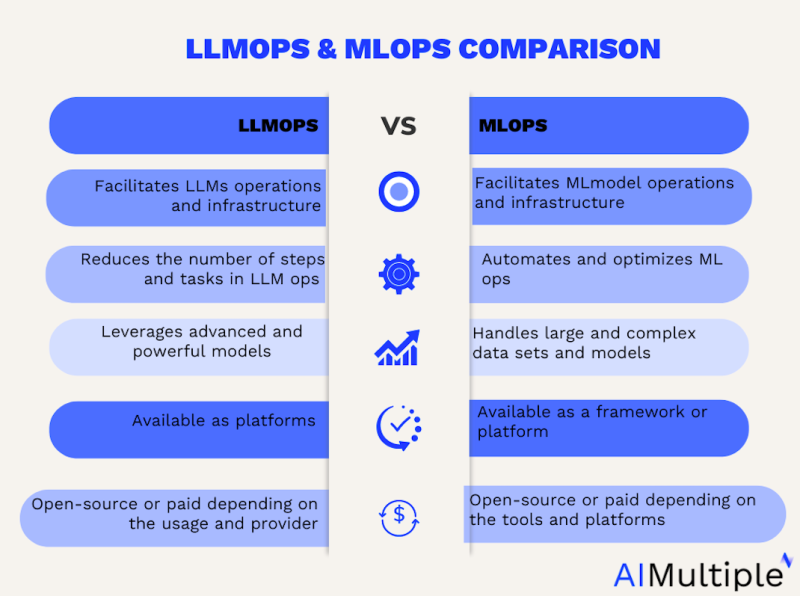

Key Differences Between LLMOPS and MLOPS

While LLMOPS and MLOPS share the common goal of streamlining AI development, several vital differences exist:

1. Computational Resources

Training and serving large language models demands extensive computational power. Dedicated hardware such as GPU clusters accelerates the heavily parallel computations involved.

Models managed with MLOPS typically have more modest hardware requirements in comparison, although serving them still requires infrastructure that scales to handle production traffic.
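A back-of-the-envelope calculation shows where the hardware gap comes from. Just holding a model's weights takes roughly parameter count × bytes per parameter; this Python sketch applies that to a 175-billion-parameter model (GPT-3's published size) stored in 16-bit precision. It deliberately ignores activations, optimizer state, and caches, all of which add further memory during training and serving.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory for model weights alone, in gigabytes (1 GB = 1e9 bytes).

    bytes_per_param=2 corresponds to 16-bit (fp16/bf16) weights. Activations,
    optimizer state, and KV caches are deliberately ignored.
    """
    return num_params * bytes_per_param / 1e9


print(weight_memory_gb(175e9))  # 350.0
print(weight_memory_gb(7e9, bytes_per_param=4))  # 28.0
```

Hundreds of gigabytes for the weights alone is why models of this size are typically sharded across multiple GPUs.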

2. Transfer Learning

LLMOPS relies heavily on transfer learning. Rather than training models from scratch, teams fine-tune pretrained foundation models on domain-specific data, which greatly simplifies development.
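Here's a deliberately tiny Python sketch of the idea. It shows the "frozen backbone, trainable head" variant of transfer learning (sometimes called linear probing): a fixed "pretrained" feature extractor is reused as-is, and only a small logistic-regression head is trained on domain data. `pretrained_features` is a hypothetical stand-in for a foundation model's embeddings; real fine-tuning would update some or all model weights, or adapter weights, using a deep learning framework.

```python
import math
from typing import List, Tuple


def pretrained_features(text: str) -> List[float]:
    """Hypothetical frozen 'pretrained' encoder: never updated during training."""
    positive = {"great", "good", "excellent"}
    negative = {"bad", "poor", "awful"}
    words = text.lower().split()
    return [
        float(sum(w in positive for w in words)),
        float(sum(w in negative for w in words)),
        1.0,  # bias feature
    ]


def train_head(data: List[Tuple[str, int]], epochs: int = 200, lr: float = 0.5) -> List[float]:
    """Fit only a logistic-regression head on top of the frozen features."""
    weights = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for text, label in data:
            feats = pretrained_features(text)
            z = sum(w * f for w, f in zip(weights, feats))
            pred = 1.0 / (1.0 + math.exp(-z))
            # Log-loss gradient step on the head weights only; the encoder stays fixed.
            weights = [w - lr * (pred - label) * f for w, f in zip(weights, feats)]
    return weights


def predict(weights: List[float], text: str) -> int:
    """Classify as 1 (positive) when the head's logit is above zero."""
    z = sum(w * f for w, f in zip(weights, pretrained_features(text)))
    return int(z > 0)


domain_data = [("great product", 1), ("good value", 1), ("bad support", 0), ("awful battery", 0)]
head = train_head(domain_data)
print([predict(head, t) for t in ["excellent screen", "poor build"]])  # [1, 0]
```

Because only three head weights are learned, four labeled examples are enough here; that reuse of existing representations is the core appeal of transfer learning.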

MLOPS focuses more on training custom ML models specialized for particular applications. However, transfer learning techniques are still frequently leveraged in MLOPS.

3. Human Feedback

LLMOPS incorporates human feedback loops, for example through reinforcement learning from human feedback (RLHF). This enables nuanced, qualitative evaluation of model performance on open-ended tasks.
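One common building block of RLHF is a reward model trained on pairwise human preferences: for each prompt, a person marks which of two responses they prefer. This Python sketch computes the standard pairwise (Bradley-Terry style) loss, -log(sigmoid(r_chosen - r_rejected)), for scores a reward model might assign; the scores are made-up numbers for illustration. It covers only the reward-modeling step, not the later reinforcement learning stage, and a real pipeline would backpropagate this loss through the reward model.

```python
import math
from typing import List, Tuple


def pairwise_preference_loss(pairs: List[Tuple[float, float]]) -> float:
    """Mean of -log(sigmoid(r_chosen - r_rejected)) over preference pairs.

    Each pair holds reward-model scores for the response a human preferred
    (r_chosen) and the one they rejected (r_rejected).
    """
    total = 0.0
    for r_chosen, r_rejected in pairs:
        margin = r_chosen - r_rejected
        # -log(sigmoid(m)) = log(1 + exp(-m)); two branches avoid overflow in exp.
        if margin >= 0:
            total += math.log1p(math.exp(-margin))
        else:
            total += -margin + math.log1p(math.exp(margin))
    return total / len(pairs)


agree = [(2.0, -1.0), (1.5, 0.0)]     # model already ranks preferred answers higher
disagree = [(-1.0, 2.0), (0.0, 1.5)]  # model ranks them lower
print(pairwise_preference_loss(agree) < pairwise_preference_loss(disagree))  # True
```

The loss shrinks as the reward model scores human-preferred responses above rejected ones, which is how qualitative judgments become a trainable signal.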

MLOPS emphasizes predefined quantitative metrics like accuracy for model assessment rather than qualitative human feedback.

4. Hyperparameter Tuning

Hyperparameter tuning in LLMOPS must balance maximizing accuracy against minimizing training costs. Automated search techniques, such as differentiable architecture search, can help reduce the manual effort involved.

In MLOPS, tuning typically focuses on improving predictive accuracy, with less emphasis on the computational cost of model training.
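The difference between the two tuning goals can be expressed as a cost-penalized objective. This Python sketch grid-searches a few configurations and scores each as accuracy minus `cost_weight` × GPU-hours. `toy_evaluate` is a hypothetical stand-in for real training runs, and its accuracy and cost formula is invented purely for illustration. Setting `cost_weight` to zero recovers accuracy-only selection.

```python
from itertools import product
from typing import Dict, Optional, Tuple


def toy_evaluate(model_size: str, epochs: int) -> Tuple[float, float]:
    """Hypothetical stand-in for a training run, returning (accuracy, gpu_hours).

    Made-up formula: larger models and more epochs raise accuracy (with
    diminishing returns after 3 epochs) but cost more compute.
    """
    base = {"small": 0.80, "large": 0.86}[model_size]
    hours_per_epoch = {"small": 1.0, "large": 8.0}[model_size]
    accuracy = base + 0.02 * min(epochs, 3)
    return accuracy, hours_per_epoch * epochs


def select_config(cost_weight: float) -> Dict[str, object]:
    """Grid-search configurations, maximizing accuracy - cost_weight * gpu_hours."""
    best: Optional[Dict[str, object]] = None
    for model_size, epochs in product(["small", "large"], [1, 3, 5]):
        accuracy, hours = toy_evaluate(model_size, epochs)
        score = accuracy - cost_weight * hours
        if best is None or score > best["score"]:
            best = {"model_size": model_size, "epochs": epochs,
                    "accuracy": accuracy, "gpu_hours": hours, "score": score}
    return best


print(select_config(0.0)["model_size"], select_config(0.01)["model_size"])  # large small
```

Even a small per-GPU-hour penalty shifts the choice to the cheaper configuration, which is the trade-off the LLMOPS paragraph describes.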

5. Performance Metrics

LLMOPS requires specialized metrics like BLEU, ROUGE, and human evaluations for assessing open-ended language tasks.
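To show what such a metric actually computes, here's a simplified ROUGE-1 sketch in Python that measures clipped unigram overlap between generated text and a reference. It uses lowercased whitespace tokenization with no stemming, so its scores will differ from those of official ROUGE implementations; treat it as an illustration of the idea, not a replacement for a maintained package.

```python
from collections import Counter
from typing import Dict


def rouge1(candidate: str, reference: str) -> Dict[str, float]:
    """Simplified ROUGE-1: clipped unigram overlap as precision, recall, and F1."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # per-word minimum of the two counts
    if overlap == 0:
        return {"precision": 0.0, "recall": 0.0, "f1": 0.0}
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    f1 = 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}


scores = rouge1("the cat sat on the mat", "the cat is on the mat")
print(scores)
```

Here five of the six words overlap, so precision, recall, and F1 all come out to about 0.83.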

MLOPS relies more on conventional metrics like accuracy, AUC, precision, recall, and F1-score for quantitatively evaluating model performance.
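For comparison, here is a dependency-free Python sketch of the label-based metrics listed above. AUC is omitted because it needs ranked scores rather than hard labels. Libraries such as scikit-learn provide tested implementations; this is only meant to show the arithmetic.

```python
from typing import Dict, List


def classification_metrics(y_true: List[int], y_pred: List[int]) -> Dict[str, float]:
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)  # true positives
    fp = sum(t == 0 and p == 1 for t, p in pairs)  # false positives
    fn = sum(t == 1 and p == 0 for t, p in pairs)  # false negatives
    correct = sum(t == p for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": correct / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}


metrics = classification_metrics([1, 0, 1, 1, 0], [1, 0, 0, 1, 1])
print(metrics)
```

With two true positives, one false positive, and one false negative, accuracy is 0.6 and precision, recall, and F1 are each about 0.67.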

6. Prompt Engineering

Crafting effective prompts and instruction sets becomes critical in LLMOPS to elicit accurate and nuanced responses from language models.
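In practice, prompt engineering often comes down to assembling instructions, a few worked examples, and the user's input in a consistent format. This Python sketch builds such a few-shot prompt; the template layout and the sample reviews are hypothetical, and the resulting string would be sent to whichever model API you use.

```python
from typing import List, Tuple


def build_few_shot_prompt(instruction: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Assemble an instruction, labeled examples, and the new input into one prompt."""
    parts = [instruction.strip(), ""]
    for text, label in examples:
        parts.append(f"Input: {text}\nOutput: {label}\n")
    # End with an open "Output:" so the model completes only the label.
    parts.append(f"Input: {query}\nOutput:")
    return "\n".join(parts)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [("Battery lasts all day.", "positive"), ("Screen cracked in a week.", "negative")],
    "Setup took five minutes and everything worked.",
)
print(prompt)
```

Keeping the template in code makes prompts versionable and testable, just like any other pipeline artifact.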

Prompt engineering is largely irrelevant within the MLOPS paradigm, which focuses on training models rather than querying them through prompts.

7. Pipelines

LLMOPS pipelines chain multiple model queries and external data sources into a single workflow (often called a chain), which makes it possible to handle complex, multi-step tasks.
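Here's a minimal Python sketch of such a chain: a naive keyword lookup stands in for an external data source, its results are inserted into a prompt, the model is queried, and the reply is post-processed. `KNOWLEDGE_BASE` (including its contents) and `call_model` are hypothetical placeholders; in practice `call_model` would wrap a real LLM API, and retrieval would use a search index or vector store.

```python
from typing import Callable, Dict, List

# Hypothetical external data source with made-up policy text.
KNOWLEDGE_BASE: Dict[str, str] = {
    "refund": "Refunds are issued within 14 days of a return.",
    "shipping": "Standard shipping takes 3-5 business days.",
}


def retrieve(question: str) -> List[str]:
    """Step 1: naive keyword retrieval from the external source."""
    q = question.lower()
    return [doc for key, doc in KNOWLEDGE_BASE.items() if key in q]


def call_model(prompt: str) -> str:
    """Hypothetical LLM call; here it simply echoes the first context line."""
    context = [line for line in prompt.splitlines() if line.startswith("- ")]
    return context[0][2:] if context else "I don't know."


def answer(question: str, model: Callable[[str], str] = call_model) -> str:
    """Chain: retrieve -> build prompt -> query model -> post-process."""
    docs = retrieve(question)
    context = "\n".join(f"- {d}" for d in docs)
    prompt = f"Answer using only this context:\n{context}\n\nQuestion: {question}"
    reply = model(prompt)
    return reply.strip()  # light post-processing before returning


print(answer("How long does a refund take?"))
print(answer("Do you sell gift cards?"))
```

Passing the model in as a parameter makes it easy to swap providers or stub the model out in tests.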

MLOPS pipelines orchestrate steps like data collection, model training, deployment, and monitoring. The emphasis is on the ML workflow rather than chaining model queries.

In summary, while both frameworks aim to streamline AI development, LLMOPS uniquely targets large language models rather than ML models in general.

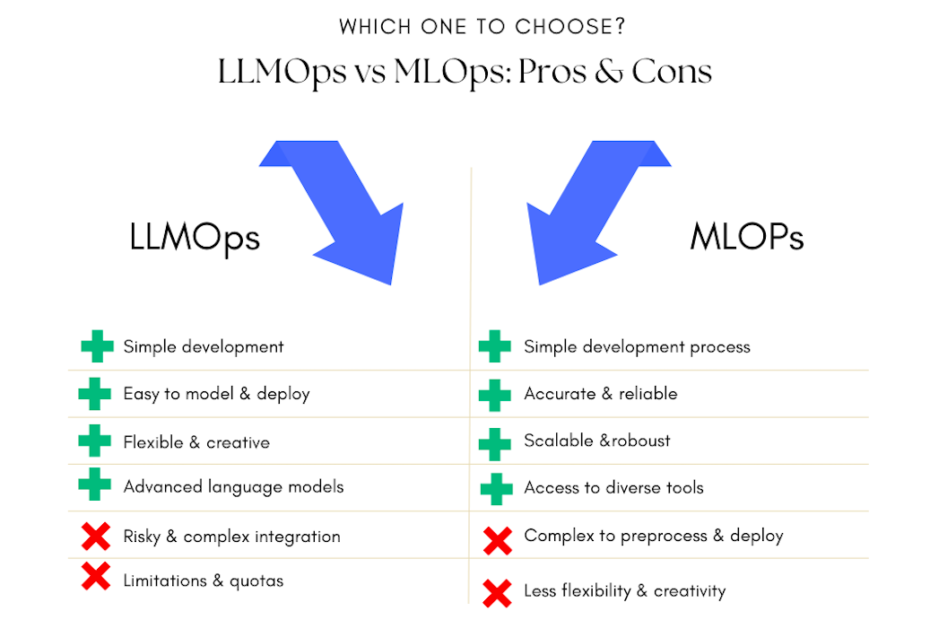

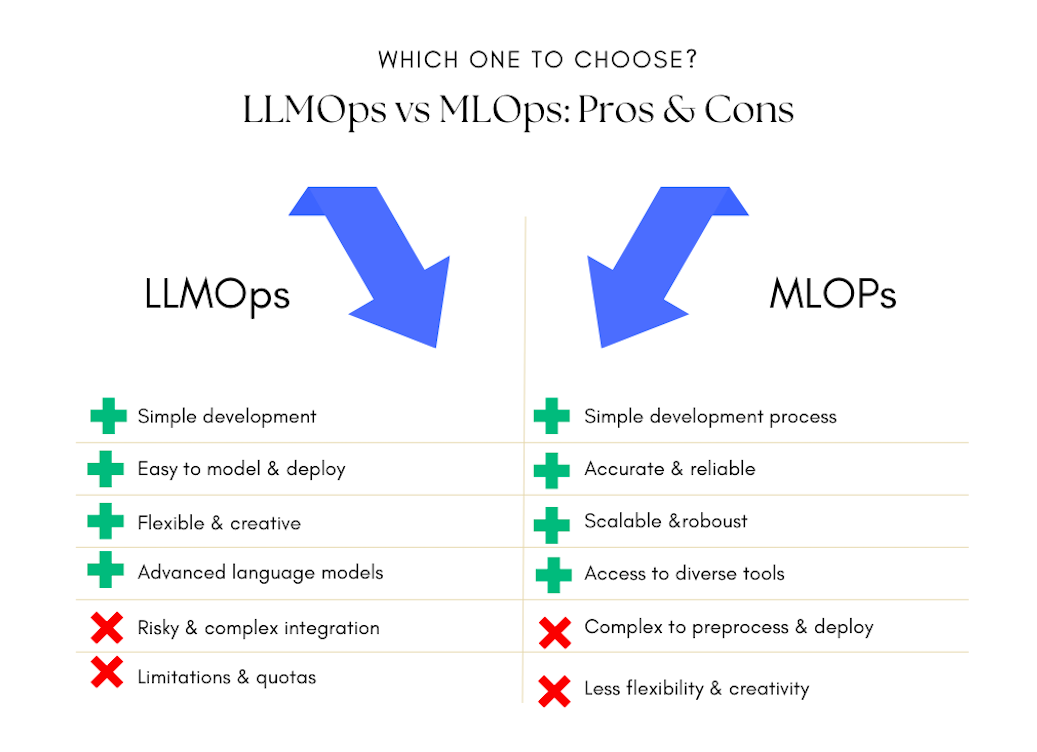

LLMOPS vs. MLOPS: Pros and Cons

Now that we've explored the key differences, let's examine the distinct pros and cons of adopting LLMOPS versus MLOPS:

LLMOPS Pros

- Simplifies development by reducing much of the tedious data collection and labeling work.
- Lets teams directly use state-of-the-art language models such as GPT-3 and Claude.
- Provides greater creative latitude through the diverse capabilities of foundation models.
- Achieves strong performance on language tasks with relatively little task-specific data, thanks to transfer learning.

According to an Anthropic survey, 68% of enterprises turn to LLMOPS to democratize AI development by simplifying it.

LLMOPS Cons

- Imposes limits on the number of input tokens (context length), response times, and output length.
- Poses risks from model errors and unexpected behaviors.
- Requires advanced technical skills for integration and orchestration using APIs and tools.
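Working within token limits is a routine LLMOPS task. This Python sketch keeps only the most recent chat messages that fit a token budget. It approximates tokens by whitespace-separated words, which is an assumption for illustration: real models count tokens with their own tokenizers, and actual counts will differ.

```python
from typing import List


def approx_tokens(text: str) -> int:
    """Rough token estimate: whitespace-separated words (real tokenizers differ)."""
    return len(text.split())


def fit_to_budget(messages: List[str], budget: int) -> List[str]:
    """Keep the newest messages whose combined estimated tokens fit the budget."""
    kept: List[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = approx_tokens(message)
        if used + cost > budget:
            break  # stop at the first message that no longer fits
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order


history = ["hi there", "tell me about mlops", "and llmops too please", "thanks"]
print(fit_to_budget(history, budget=6))  # ['and llmops too please', 'thanks']
```

Dropping the oldest context first is only one policy; summarizing older turns is a common alternative when early context matters.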

MLOPS Pros

- Provides end-to-end automation of the machine learning lifecycle.
- Ensures accuracy, reliability, and governance through rigorous pipelines.
- Allows seamless scalability through robust serving infrastructure.
- Offers versatility across diverse use cases by supporting different ML algorithms.

According to Gartner, more than 50% of enterprises will implement MLOPS capabilities by 2025, highlighting its immense value.

MLOPS Cons

- Involves significant upfront effort in data preparation, infrastructure, and pipelines.
- Restricts creative applications by focusing on specialized problem domains.
- Requires cross-functional expertise spanning data science, engineering, and IT operations.

In summary, LLMOPS simplifies leveraging advanced language models while MLOPS delivers robust, scalable ML with rigorous governance.

5 Factors for Comparing LLMOPS vs. MLOPS

With a firm grasp of the trade-offs, how do you thoroughly evaluate whether LLMOPS or MLOPS aligns more closely with your needs?

Here are the five key factors I analyze to guide clients in choosing between LLMOPS and MLOPS:

1. Alignment With Business Goals

Do your objectives center on efficiently deploying ML models or on harnessing the power of large language models? Defining your core goals and priorities indicates which approach matches them best.

2. Team Experience and Capabilities

Assess your in-house skills in machine learning, software engineering, DevOps, and working with language models. Cross-functional ML engineering teams are well suited to MLOPS, while expertise in NLP and prompt engineering suits LLMOPS.

3. Access to Resources

Evaluate your available resources for extensive compute, model hosting infrastructure, and data pipelines. LLMOPS demands specialized hardware while MLOPS requires mature DevOps capabilities.

4. Use Case Requirements

Analyze whether your projects involve specialized ML models or focus heavily on language and textual data. Projects centered on natural language processing tend to favor LLMOPS.

5. Industry Trends and Maturity

Explore your industry's maturity in adopting MLOPS versus LLMOPS. Some verticals currently favor one approach, but both continue to gain traction rapidly.

I guide clients through these five factors to determine the ideal technology strategy aligned with their specific business needs and constraints.

While this framework provides guidance, a hybrid approach combining MLOPS and LLMOPS often works better than dogmatically adhering to one.

Real-World Applications of LLMOPS and MLOPS

To provide further clarity, let's explore some real-world examples applying LLMOPS and MLOPS:

LLMOPS Use Cases

- Content generation: Automating content creation for blogs, social media, and marketing using language models.
- Search and recommendations: Powering search and recommendations using language models to analyze user behavior data.
- Chatbots and virtual assistants: Building conversational agents for customer service by leveraging foundation models like Claude.
- Drug discovery: Identifying potential new drug candidates through analysis of research papers and datasets using LLMs.

According to an Anthropic survey, 58% of enterprises use LLMOPS for content generation while 51% apply it for search and recommendations.

MLOPS Use Cases

- Predictive maintenance: Deploying ML models to forecast equipment failures and optimize maintenance scheduling.
- Algorithmic trading: Automating trading decisions by deploying ML algorithms trained on financial data.
- Fraud detection: Operationalizing ML models to detect fraudulent transactions, claims, and account activities in real time.
- Autonomous vehicles: Managing and continuously improving ML models that enable self-driving capabilities across diverse environments.

Per an IBM study, 61% of organizations use MLOPS for predictive maintenance and diagnostics while 42% employ it for image recognition applications.

The use cases highlight how both approaches serve important yet distinct real-world needs. Evaluating your specific applications guides the technology selection.

Key Takeaways on Selecting Between LLMOPS and MLOPS

Based on extensive research and years of advising global enterprises, here are my key recommendations on navigating the choice between LLMOPS and MLOPS:

- LLMOPS focuses specifically on large language models, while MLOPS has a broader scope across ML techniques.
- LLMOPS simplifies leveraging advanced language models, while MLOPS delivers robust, scalable ML pipelines.
- There's no rigid boundary: hybrid approaches often work better than an either/or choice.
- Analyze business objectives, use cases, team skills, resources, and industry trends to determine the ideal strategy.
- Develop a nuanced perspective on the pros and cons of both approaches.
- Emphasize flexibility and avoid dogmatic adherence to any single technology framework.

By working through this five-factor assessment, you can make a well-informed choice between LLMOPS and MLOPS that fits your specific needs.

Adopting the right strategic approach will empower your organization to maximize business value from AI and machine learning innovation in 2024 and beyond.

I hope you found this detailed yet friendly guide useful. Feel free to reach out if you have any other questions! I'm always happy to help fellow data enthusiasts navigate key technology decisions.