Developing machine learning models involves more than just training them on data. To build models that generalize well beyond the training data, data scientists need to optimize a set of model configurations called hyperparameters. Hyperparameter optimization, also known as hyperparameter tuning, is key to maximizing model performance.

In this comprehensive guide, we‘ll explore what hyperparameters are, why tuning them matters, different optimization techniques, and tools to streamline hyperparameter search.

What are Hyperparameters?

Hyperparameters are configurations external to the model that determine how it gets trained. They are not learned or updated during training – their values need to be set before training starts.

Some examples of common hyperparameters across ML algorithms include:

- Number of trees in a random forest

- Number of hidden layers in a neural network

- Learning rate for gradient descent

- Regularization strength

These hyperparameters essentially define the architecture of the model. They control the model learning process and how well it can generalize beyond the training data.

In contrast, model parameters are learned from the data during training. Weights and biases in a neural network are examples of parameters. While parameters are internal to the model, hyperparameters are external configurations.

Types of Hyperparameters

Different machine learning algorithms have various tunable hyperparameters that have a significant impact on performance:

Neural Networks

- Number of hidden layers

- Number of units per hidden layer

- Learning rate

- Activation function choice

- Batch size

- Regularization methods (dropout, L1/L2 regularization)

Support Vector Machines

- Kernel type (linear, polynomial, radial basis function)

- Kernel coefficients

- Regularization parameter C

- Gamma parameter for radial basis function kernels

Random Forest

- Number of trees

- Maximum tree depth

- Minimum leaf size

- Number of random features per tree

Gradient Boosting Machines

- Learning rate

- Number of boosting stages

- Maximum tree depth

- Subsample ratio of training data per tree

Tuning these algorithm-specific hyperparameters along with general ones like regularization rate is key to achieving optimal model performance.

Why Tune Hyperparameters?

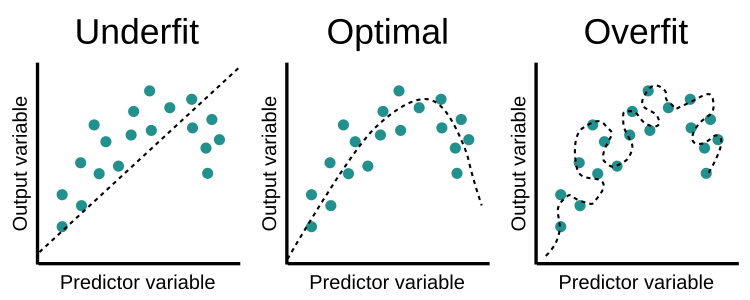

The choice of hyperparameters significantly impacts model performance. Suboptimal hyperparameter values can lead to overfitting or underfitting:

Overfitting occurs when the model fits the training data too well but fails to generalize to new data. This happens when the model becomes too complex relative to the amount and noise in the training data.

Underfitting is when the model fails to even fit the training data properly. This could be due to insufficient model complexity.

Figure 1. Examples of underfitting, overfitting, and an optimal fit. Source: educative.io

Tuning hyperparameters helps find the right balance – where the model fits the training data well but also generalizes to new unseen data. The goal of hyperparameter optimization is to find the "sweet spot" that gives the best validation performance.

For instance, increasing the depth of a decision tree can improve its fit to the training data. But setting the maximum depth too high may lead to overfitting. Hyperparameter search can help identify the ideal tree depth.

Hyperparameter Optimization Techniques

Manually testing different values for hyperparameters can be tedious for anything beyond simple models. There are several techniques, both manual and automated, for hyperparameter optimization:

Manual Search

This involves manually selecting values, training models, and picking the best configuration based on some metric like validation accuracy. Domain expertise can inform what values may work well.

While simple, manual search becomes impractical as the number of hyperparameters grows. It is also prone to testing only a limited set of values.

Grid Search

In grid search, a grid is defined by setting specific values for each hyperparameter to test. An exhaustive search is done over all the combinations of these values.

For example, trying tree depths of 5, 10, 15 and learning rates of 0.01, 0.1, 0.5 would result in 9 combinations to evaluate. The best performing combination is selected.

While more systematic than manual search, grid search can still be computationally expensive. Performance also depends heavily on how the grid values are defined.

Here is some sample pseudocode for grid search:

# Define hyperparameter grid

depth = [5, 10, 15]

learning_rate = [0.01, 0.1, 0.5]

grid = [depth, learning_rate]

# Initialize best_accuracy to 0

best_accuracy = 0

# Loop through grid to test combinations

for d in depth:

for lr in learning_rate:

# Train model with combination

model.train(max_depth=d, learning_rate=lr)

# Evaluate accuracy

accuracy = model.evaluate()

# Track best accuracy

if accuracy > best_accuracy:

best_accuracy = accuracy

best_params = [d, lr]

# Best hyperparameters are best_paramsAs shown above, grid search involves nesting loops to test a pre-defined grid of values. The best hyperparameters are then extracted based on the performance metric.

Random Search

Rather than pre-defining values, random search selects hyperparameters randomly from specified distributions. This enables sampling from a wider range of values.

The number of combinations to try can be specified instead of having to evaluate all grid points like in grid search. This greatly reduces computational expense.

Here is some sample pseudocode for random search:

# Define hyperparameter distributions

depth = UniformDistribution(min=5, max=20)

learning_rate = LogUniformDistribution(min=0.001, max=1)

# Initialize best_accuracy to 0

best_accuracy = 0

# Run for n_iterations

for i in range(n_iterations):

# Sample a combination

d = depth.sample()

lr = learning_rate.sample()

# Train model

model.train(max_depth=d, learning_rate=lr)

# Track best accuracy

accuracy = model.evaluate()

if accuracy > best_accuracy:

best_accuracy = accuracy

best_params = [d, lr]

# Return best hyperparameters

return best_paramsRather than loops, random sampling from distributions avoids testing all combinations. This is more efficient than grid search.

Bayesian Optimization

Bayesian optimization builds a probabilistic model between hyperparameter values and model performance. This surrogate model is used to guide which values to test next.

It is more efficient than random search since it leverages information from previous evaluations to pick more promising values. Bayesian optimization also better handles expensive model evaluations.

The general process is:

-

Initialize a Bayesian model between hyperparameters (x) and a performance metric like accuracy (y).

-

Sample a set of hyperparameters based on an acquisition function that uses the model‘s predicted mean and uncertainty.

-

Evaluate the metric on real model with sampled hyperparameters.

-

Update the Bayesian model with the new datapoint.

-

Repeat steps 2-4 for a certain number of iterations.

-

Return the hyperparameters with the best observed performance.

By modeling the objective function, Bayesian optimization quickly hones in on optimal regions to sample from. The acquisition function balances exploration and exploitation.

Population Based Methods

Algorithms like genetic algorithms and particle swarm optimization view hyperparameters as populations of solutions. The population evolves over generations by applying operations like mutation and crossover to converge on optimal values.

These methods can effectively navigate complex search spaces but also have a large computational footprint. They may find local rather than global optima.

Here is some pseudocode for a genetic algorithm to optimize hyperparameters:

# Initialize population of hyperparameter sets

population = []

# Evaluate initial population

for p in population:

loss = train_and_evaluate_model(p)

p.fitness = 1 / loss

for generation in range(max_generations):

# Perform selection based on fitness

parents = selection(population)

# Crossover parents to create children

children = crossover(parents)

# Mutate children

children = mutate(children)

# Evaluate children

for c in children:

loss = train_and_evaluate_model(c)

c.fitness = 1 / loss

# Select population for next generation

population = select_next_generation(population + children)

# Return best hypers after final generation

best = population[0]

return bestThe key aspects are representing hyperparameters as a population, evolving generations via crossover and mutation, and assigning fitness scores based on model performance.

Adaptive Search Methods

Techniques like gradient descent can directly optimize hyperparameters by computing gradients with respect to model performance. This is efficient but only applicable for certain differentiable hyperparameters like neural network learning rates.

Comparing Hyperparameter Optimization Techniques

| Method | Pros | Cons |

|---|---|---|

| Manual Search | Intuitive, no implementation needed | Time consuming, limited scope |

| Grid Search | Methodical, easy to parallelize | Exponentially grows with dimensions |

| Random Search | Flexible, explores diverse values | May need many samples |

| Bayesian Optimization | Sample efficient, balances exploration/exploitation | Complex implementation |

| Evolutionary Algorithms | Effective for complex high-dimensional spaces | Computationally expensive |

| Adaptive Methods | Fast for differentiable hyperparameters | Limited to only some hyperparameter types |

In general, Bayesian optimization works well for many use cases due to its sample efficiency. Random search is simple and effective for models that aren‘t too expensive to train. Grid and manual search have limited scalability but can provide adequate performance depending on the context.

Population based and adaptive techniques have niche use cases where they excel but come with downsides like high compute requirements.

Top Hyperparameter Optimization Tools

Here are some popular open source and commercial tools for hyperparameter tuning:

Hyperopt – A Python library for randomized optimization using tree-structured Parzen estimators.

Optuna – An auto-tuning framework for optimizing machine learning hyperparameters. Has integrations with ML frameworks like PyTorch.

Scikit-optimize – A simple Python implementation of Bayesian optimization using Gaussian processes.

SigOpt – SaaS platform for optimizing ML experiments and hyperparameters using Bayesian methods.

Talos – Open source hyperparameter scanning and intelligence engine for Keras models.

Google Vizier – Cloud service for black-box optimization and hyperparameter tuning.

MLOps platforms like Comet, Weights & Biases, and Valohai also provide experiment tracking and hyperparameter optimization capabilities.

Here is a comparison of some key features across tools:

| Tool | Open source | Search algorithms | Concurrency | Visualizations | Integrations |

|---|---|---|---|---|---|

| Hyperopt | Yes | Random, TPE | Yes | Minimal | Scikit-learn, PyTorch |

| Optuna | Yes | TPE, CMA-ES | Yes | Good | FastAI, PyTorch, XGBoost |

| Scikit-optimize | Yes | Bayesian | No | Good | Scikit-learn |

| SigOpt | No | Bayesian | Yes | Good | Scikit-learn, TensorFlow |

| Talos | Yes | Random, grid | Yes | Good | Keras |

When evaluating tools, consider aspects like:

- Ease of integration with your training environment

- Flexibility in defining search space and optimization strategy

- Concurrency support for parallelizing tuning jobs

- Visualization and analysis of results

- Cost, if using a cloud platform

Best Practices for Hyperparameter Tuning

Here are some tips for effective hyperparameter optimization:

-

Reduce search space – Limit bounds for continuous hyperparameters and levels for categorical ones. Start broader and narrow as needed.

-

Modularize model code – Structure model implementations to easily specify and modify hyperparameters.

-

Leverage partial training – Initially evaluate hyperparameters using partial model training to reduce compute.

-

Train simple model first – Tune hyperparameters for a basic model before trying more complex architectures.

-

Evaluate repeatedly – Run optimization multiple times with different random seeds to account for variability.

-

Visualize results – Plot hyperparameter values against model metrics to diagnose patterns and trends.

-

Refine search strategy – Adapt technique and search space based on initial results.

Case Study: Optimizing CNN Hyperparameters for Image Classification

Let‘s go through an example of tuning hyperparameters for a convolutional neural network (CNN) model trained on the classic MNIST image classification dataset:

-

Start with reasonable defaults – 2 conv layers, 100 dense units, ReLU activations, Adam optimizer

-

Do coarse-grained random search over learning rate, # layers, # units per layer, optimizers

-

Plot model accuracy vs hyperparameters to find promising regions

-

Do fine-grained Bayesian search over learning rate, momentum, dropout regularization

-

Achieve test accuracy of 99.2% compared to 98.5% with default hyperparameters

By taking a staged approach – simple to complex, coarse to fine – we efficiently navigate the hyperparameter space for CNNs. Testing Techniques like grid and random search help narrow the scope, while Bayesian optimization fine tunes the most impactful hyperparameters.

Overall, hyperparameter optimization is crucial for developing accurate and robust machine learning models. Both simple and advanced techniques exist to automate the search for optimal configurations. Leveraging the right tools and best practices allows data scientists to efficiently navigate high-dimensional hyperparameter spaces.