Few-shot learning is an emerging technique in machine learning that promises to replicate human-like learning abilities in artificial intelligence systems. This guide provides an in-depth look at what few-shot learning is, why it matters, how it works, key applications, methods, challenges, and implementation.

What is Few-Shot Learning?

Few-shot learning refers to training machine learning models, especially deep neural networks, to learn new concepts, categories, or tasks from just a few examples.

Typically, deep learning algorithms require thousands or even millions of labeled training examples to learn patterns and build predictive models. However, by using specialized techniques, few-shot learning allows models to learn from orders of magnitude less data.

For instance, few-shot image classifiers can accurately categorize new objects after seeing just 1-5 example images per class during training. Other few-shot models can translate between languages after learning from a couple of sentence pairs, understand speech commands from just a few audio samples, or grasp new concepts from a few paragraphs of text.

The key capability is being able to rapidly learn new concepts, categories, or tasks from a small number of examples – often fewer than 100. This is similar to the way humans can quickly learn new concepts from very few examples.

As an umbrella term, few-shot learning covers one-shot learning (a single example per class), few-shot learning in the narrow sense (fewer than about 10 examples), and low-shot learning (fewer than about 1,000 examples). This is in contrast to traditional deep learning, which usually requires tens of thousands to millions of examples per class to train very large models.

According to a 2021 survey, few-shot learning typically refers to 1-10 examples per class during training, while low-shot learning can range from 10-1000 examples per class.

Why is Few-Shot Learning Important?

There are several key reasons why few-shot learning has gained significant interest:

- Data efficiency – Collecting and manually labeling thousands of training examples is incredibly expensive, time-consuming, and often infeasible. Few-shot learning promises massive gains in data efficiency for training deep learning models.

- Learning from sparse data – Many real-world concepts like rare diseases only have a few examples available. Few-shot learning can unlock modeling and prediction from these types of long-tail distributions.

- Continual learning – Humans and animals can continually acquire and fine-tune knowledge over a lifetime. Few-shot learning delivers a step towards online continual learning in AI systems.

- Personalization – With few-shot learning, models can be rapidly customized to individual users with just a little implicit feedback or data from each user. This enables personalized services.

- Cognitive science – Studying how neural networks can learn new concepts from tiny datasets provides insights into human learning capacities.

- Accessibility – Requiring less data lowers the barrier for smaller organizations to train performant AI models. Few-shot learning may democratize access to custom machine learning.

In summary, few-shot learning opens up abilities like rapidly learning new visual concepts, adapting to user preferences, translating new languages, and recognizing rare examples – all capabilities that have been difficult for standard deep learning techniques that demand huge training sets. The business and research impacts could be profound.

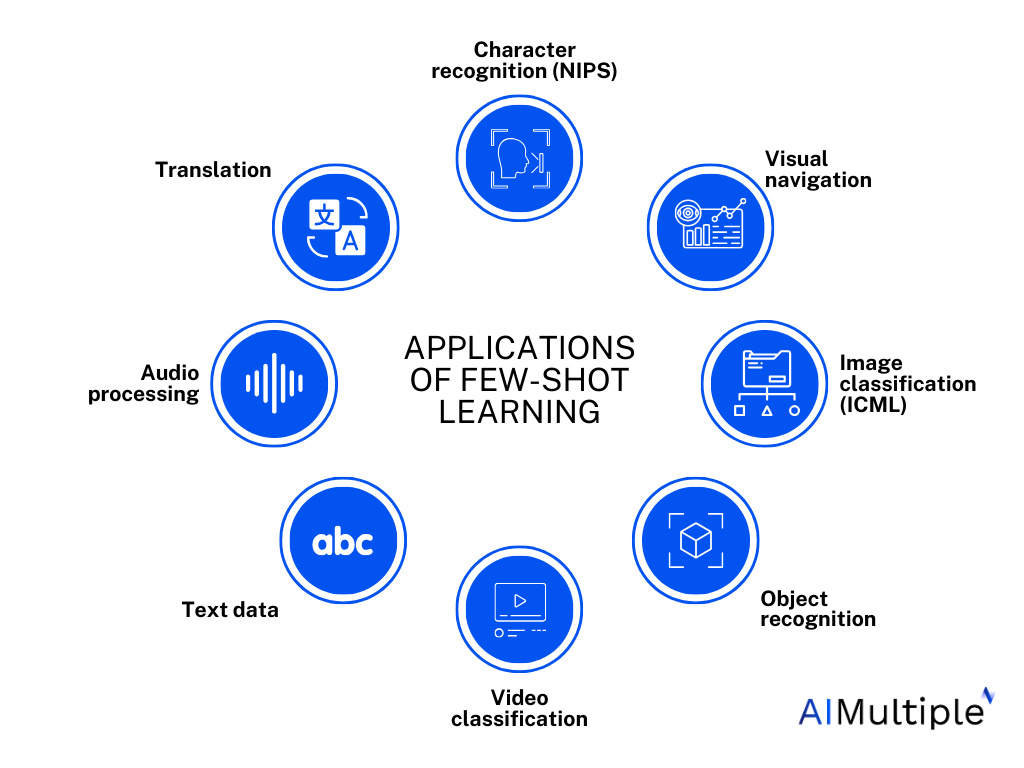

Applications of Few-Shot Learning

Here are some of the key areas where few-shot learning is being applied:

Computer Vision

Few-shot learning is especially promising for computer vision tasks like image classification, object recognition, gesture recognition, and anomaly detection in manufacturing. Example applications include:

- Classifying rare objects or animals from a handful of photographs. For example, identifying endangered species from sparse field images.

- Recognizing new hand gestures for human-computer interaction after learning from just a few examples.

- Detecting manufacturing defects and anomalies after learning from a couple of defective parts.

- Identifying faces from ID photos after seeing just 1-5 examples of each person.

- Tracking objects after training on 1-5 frames with the object labeled.

Natural Language Processing

In NLP, few-shot learning can enable textual understanding from only a couple of examples:

- Learning new word or phrase meanings from just a few contextual examples.

- Translating between low-resource languages where bilingual text is sparse.

- Classifying user intents in conversational AI after very few exchanges.

- Parsing sentences after exposure to only a couple of examples of a new linguistic structure.

- Learning to predict outcomes from legal case summaries after training on a few examples.

Drug Discovery

Discovering new medicines often starts from very limited datasets, since few molecule candidates exhibit the desired properties. Few-shot learning models have shown an encouraging ability to identify promising molecules from tiny datasets during the lead generation phase.

Robotics

Robots that can dynamically adapt to new situations from a few demonstrations can better operate in open environments. Few-shot learning can enable skills like:

- Learning new motions and gaits from a single demonstration.

- Grasping new objects after seeing just a couple of examples.

- Navigating novel environments after training from limited sensor data.

Recommendation Systems

By quickly adapting to individual users based on just brief interaction histories, few-shot learning could enable new forms of personalized recommendations and interfaces.

These are just some of the domains that could be transformed by rapid learning from tiny training sets. Anytime labeled data is sparse or expensive to collect, few-shot learning promises to unlock new AI capabilities.

How Does Few-Shot Learning Work?

While deep learning typically requires massive datasets to train models with millions of parameters, few-shot learning relies on specialized training strategies and techniques to enable rapid learning from tiny datasets. Here are some of the main approaches:

Meta-Learning

One popular approach is to frame few-shot learning as a meta-learning problem. The model aims to "learn how to learn" – specifically, how to adapt rapidly from just a few examples.

During meta-training, the model is trained on a series of simulated few-shot learning tasks, often called episodes. By repeatedly adapting to small training sets across a distribution of learning tasks, the model acquires an inductive bias that allows it to adapt quickly when faced with new few-shot learning problems. This meta-learned prior helps the model fine-tune to new concepts with limited samples.

Meta-learning framework. The algorithm is trained on simulated few-shot tasks and tested on new tasks. (Source: Borealis.ai)
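The simulated tasks described above are commonly built by sampling small "N-way K-shot" episodes from a larger labeled dataset. The following is a minimal sketch of that idea, not a reference implementation: the function name `sample_episode`, its parameters, and the toy label list are all assumptions made for illustration.

```python
import random
from collections import defaultdict

def sample_episode(labels, n_way, k_shot, n_query, rng):
    """Sample one N-way K-shot task.

    Returns (support, query), each a list of (dataset_index, episode_label).
    """
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    # Only classes with enough examples for both splits are eligible.
    eligible = [c for c, idxs in by_class.items() if len(idxs) >= k_shot + n_query]
    if len(eligible) < n_way:
        raise ValueError("not enough classes with k_shot + n_query examples")
    classes = rng.sample(eligible, n_way)
    support, query = [], []
    for episode_label, c in enumerate(classes):
        picked = rng.sample(by_class[c], k_shot + n_query)
        # The first k_shot examples form the support set, the rest the query set.
        support += [(i, episode_label) for i in picked[:k_shot]]
        query += [(i, episode_label) for i in picked[k_shot:]]
    return support, query

# Toy dataset: 10 classes with 20 examples each (labels only, features omitted).
labels = [c for c in range(10) for _ in range(20)]
rng = random.Random(0)
support, query = sample_episode(labels, n_way=5, k_shot=1, n_query=3, rng=rng)
print(len(support), len(query))  # 5 15
```

Classes are relabeled 0..N-1 inside each episode so that the model cannot memorize a fixed mapping from class identity to output index across tasks.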

Metric Learning

Some few-shot learning models use distance metric learning, where an embedding space is learned to cluster together examples from the same class while separating examples from different classes.

At test time, new classes not seen during training can be recognized based on the distance between the embedded test example and the few embedded, labeled support examples provided for each new class. Intuitively, examples closer together in the metric space are deemed more similar.
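One common way to train such an embedding is a pairwise contrastive loss, which penalizes distance between same-class pairs and penalizes closeness between different-class pairs up to a margin. The sketch below only computes the loss on fixed toy embeddings; the function name, the margin value, and the data are illustrative assumptions, and a real system would backpropagate this loss through an embedding network.

```python
import numpy as np

def contrastive_loss(emb_a, emb_b, same_class, margin=1.0):
    """Mean pairwise contrastive loss over a batch of embedding pairs."""
    dist = np.linalg.norm(emb_a - emb_b, axis=1)
    # Same-class pairs: any distance is penalized, pulling them together.
    pull = same_class * dist ** 2
    # Different-class pairs: penalized only while closer than the margin.
    push = (1.0 - same_class) * np.maximum(margin - dist, 0.0) ** 2
    return float(np.mean(pull + push))

# Two pairs at the same distance (0.1): one same-class, one different-class.
a = np.array([[0.0, 0.0], [0.0, 0.0]])
b = np.array([[0.0, 0.1], [0.0, 0.1]])
same = np.array([1.0, 0.0])
loss = contrastive_loss(a, b, same)
print(round(loss, 2))  # 0.41
```

The same-class pair contributes 0.1² = 0.01 and the different-class pair contributes (1 − 0.1)² = 0.81, so nearly all of the loss comes from the two different-class points sitting too close together.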

Data Augmentation

Advanced data augmentation techniques can artificially expand the limited few-shot training set. This includes traditional techniques like flipping, cropping, adding noise, stylization, mixing, and other perturbations.

It also includes using generative models like GANs and VAEs to synthesize additional diverse examples for the sparse training classes, producing new examples from interpolation between instances, and other data synthesis approaches.
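As a rough illustration of the simpler techniques mentioned above (flipping, additive noise, and interpolation between instances), here is a small NumPy sketch. The helper names `augment` and `mixup`, the noise level, and the random toy "images" are assumptions for the example; generative approaches such as GANs or VAEs are not shown.

```python
import numpy as np

def augment(image, rng, noise_std=0.05):
    """Return a randomly flipped, noise-perturbed copy of an HxW image in [0, 1]."""
    out = image[:, ::-1] if rng.random() < 0.5 else image.copy()
    out = out + rng.normal(0.0, noise_std, size=out.shape)
    return np.clip(out, 0.0, 1.0)

def mixup(image_a, image_b, lam):
    """Interpolate between two instances; lam in [0, 1] is the weight of image_a."""
    return lam * image_a + (1.0 - lam) * image_b

rng = np.random.default_rng(0)
support = rng.random((5, 8, 8))  # five toy 8x8 "images" from one class

# Expand the 5-shot support set to 20 examples with 4 random variants each.
augmented = np.stack([augment(img, rng) for img in support for _ in range(4)])
blended = mixup(support[0], support[1], lam=0.7)
print(augmented.shape)  # (20, 8, 8)
```

Interpolating only between instances of the same class keeps the label unambiguous; mixing across classes would also require mixing the labels.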

Transfer Learning

Leveraging powerful base models pretrained on large datasets can transfer learned feature representations to the target task. Either feature extraction or fine-tuning provides strong inductive biases that reduce the sample complexity for learning new concepts.
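The feature-extraction variant can be sketched as a frozen backbone plus a small classifier head fitted on the few labeled examples. In the sketch below the "pretrained backbone" is only a stand-in (a fixed random projection with a ReLU), the head is a closed-form ridge regression on one-hot targets, and the data are synthetic Gaussian blobs; all of these are assumptions chosen to keep the example self-contained.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for a pretrained backbone: a fixed random projection followed by ReLU.
# In practice this would be a network pretrained on a large dataset.
W_frozen = rng.normal(size=(16, 64))

def extract_features(x):
    """Frozen feature extractor: its weights are never updated."""
    return np.maximum(x @ W_frozen, 0.0)

def fit_linear_head(features, labels, n_classes, l2=1.0):
    """Fit a ridge-regression classifier head on one-hot targets (closed form)."""
    targets = np.eye(n_classes)[labels]
    gram = features.T @ features + l2 * np.eye(features.shape[1])
    return np.linalg.solve(gram, features.T @ targets)

# Toy 3-way 5-shot task: each class is a Gaussian blob in input space.
centers = rng.normal(scale=3.0, size=(3, 16))
labels = np.repeat(np.arange(3), 5)
x_support = centers[labels] + rng.normal(scale=0.5, size=(15, 16))

# Only the head is trained, using the 15 support examples.
head = fit_linear_head(extract_features(x_support), labels, n_classes=3)

# Classify one new query point per class.
x_query = centers + rng.normal(scale=0.5, size=(3, 16))
pred = (extract_features(x_query) @ head).argmax(axis=1)
print(pred)
```

Because only the 64×3 head is fitted, far fewer parameters have to be estimated from the support set than if the whole network were trained from scratch.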

Memory Networks

Memory-augmented neural networks incorporate external memory storage. These models can rapidly assimilate new data points into their memory banks. At test time, an attention mechanism over the stored memories allows classification from the few examples.

Model Architectures

Specialized model architectures designed for few-shot learning include graph neural networks to capture relational reasoning between all examples and transformers for representing interactions between the instances through self-attention.

In practice, combining strategies like data augmentation, metric learning, weight imprinting, and transfer learning often yields better results on few-shot benchmarks than any single technique. But reproducing human-level learning from tiny datasets remains an open challenge.

Comparing Zero-Shot, One-Shot, and Few-Shot Learning

It's worth clarifying the differences between the related terms zero-shot, one-shot, and few-shot learning:

- Zero-Shot Learning: The model is trained to recognize completely new classes without having seen any examples from that class. Recognition relies purely on a textual description of the new class or high-level semantic attributes.

- One-Shot Learning: The model must learn to classify new examples after being exposed to just a single labeled example from each new class.

- Few-Shot Learning: The model is trained to generalize from a small number of labeled examples per class, typically a handful (for example 1-10) and at most around 100. One-shot learning is the special case with exactly one example per class.

One-shot and few-shot learning are considered supervised learning problems since the model receives a handful of labeled examples per class. In contrast, zero-shot learning operates solely on class-level descriptions without direct examples.

Few-Shot Learning Methods

Many techniques have been proposed specifically for the few-shot learning setting. Here we survey some of the most influential few-shot learning algorithms:

Matching Networks (2016)

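Matching networks were an early few-shot learning approach based on a differentiable form of nearest neighbors. A neural network learns an embedding space where examples from the same class are pulled together. At test time, a query from a new class is classified by comparing its embedding with the support set embeddings: each support label is weighted by an attention score derived from similarity, so the closest support examples dominate the prediction.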
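The classification step can be sketched as a softmax over cosine similarities between the query and each support embedding, followed by a weighted vote over the support labels. This is a simplified illustration on hand-written 2-D embeddings: the embedding network and any context-dependent encoding of the support set are omitted, and the function name is an assumption.

```python
import numpy as np

def matching_predict(query_emb, support_emb, support_labels, n_classes):
    """Class probabilities for each query as an attention-weighted vote over support labels."""
    q = query_emb / np.linalg.norm(query_emb, axis=1, keepdims=True)
    s = support_emb / np.linalg.norm(support_emb, axis=1, keepdims=True)
    sims = q @ s.T                                    # cosine similarities, shape (Q, S)
    attn = np.exp(sims - sims.max(axis=1, keepdims=True))
    attn /= attn.sum(axis=1, keepdims=True)           # softmax over support examples
    return attn @ np.eye(n_classes)[support_labels]   # shape (Q, n_classes)

# Toy 2-way 2-shot support set with already-computed embeddings.
support_emb = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
support_labels = np.array([0, 0, 1, 1])
query_emb = np.array([[0.8, 0.2], [0.2, 0.8]])

probs = matching_predict(query_emb, support_emb, support_labels, n_classes=2)
print(probs.argmax(axis=1))  # [0 1]
```

Every step is differentiable, which is what allows the embedding to be trained end to end on the episode's classification loss.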

Prototypical Networks (2017)

Prototypical networks learn a metric space where examples cluster around a single prototype representation for each class, computed as the mean of that class's embedded support examples. Classification is performed by calculating the distance between the embedded test example and these prototypes and choosing the nearest one.
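A minimal sketch of the prototype computation and the distance-based classification follows, assuming the embeddings have already been produced by some network. The helper names and the toy 2-D embeddings are illustrative assumptions; squared Euclidean distance is used here as the metric.

```python
import numpy as np

def prototypes(support_emb, support_labels, n_classes):
    """Class prototype = mean of that class's support embeddings."""
    return np.stack([support_emb[support_labels == c].mean(axis=0)
                     for c in range(n_classes)])

def proto_predict(query_emb, protos):
    """Softmax over negative squared Euclidean distances to each prototype."""
    d2 = ((query_emb[:, None, :] - protos[None, :, :]) ** 2).sum(axis=2)
    logits = -d2
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    p = np.exp(logits)
    return p / p.sum(axis=1, keepdims=True)

# Toy 2-way 2-shot episode in a 2-D embedding space.
support_emb = np.array([[0.0, 0.0], [0.0, 2.0], [4.0, 4.0], [6.0, 4.0]])
support_labels = np.array([0, 0, 1, 1])
query_emb = np.array([[1.0, 1.0], [4.0, 5.0]])

protos = prototypes(support_emb, support_labels, n_classes=2)
print(protos)                                    # [[0. 1.] [5. 4.]]
print(proto_predict(query_emb, protos).argmax(axis=1))  # [0 1]
```

Averaging the support embeddings means the cost of classifying a query grows with the number of classes rather than with the number of support examples.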

Model-Agnostic Meta-Learning (2017)

Model-agnostic meta-learning (MAML) casts few-shot learning as a meta-learning problem. Rather than learning a fixed classifier, it learns an initialization of the model parameters from which a few gradient steps on a handful of samples are enough to adapt to a new task.
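The two nested loops can be made concrete on a deliberately tiny problem. The sketch below assumes a one-parameter model y = w·x and tasks that are lines with different slopes, so the gradient through the inner update can be written by hand instead of relying on automatic differentiation. The task distribution, learning rates, and function names are all illustrative assumptions.

```python
import numpy as np

def grad(w, x, y):
    """d/dw of the mean squared error for the model y_hat = w * x."""
    return 2.0 * np.mean(x * (w * x - y))

def maml_step(w, tasks, inner_lr, outer_lr):
    """One meta-update over a batch of tasks, each given as (x_s, y_s, x_q, y_q)."""
    meta_grad = 0.0
    for x_s, y_s, x_q, y_q in tasks:
        # Inner loop: one gradient step on the support set.
        w_adapted = w - inner_lr * grad(w, x_s, y_s)
        # Chain rule through the inner step: d(w_adapted)/dw = 1 - inner_lr * Hessian,
        # where the Hessian of the support loss is 2 * mean(x_s ** 2).
        d_adapted = 1.0 - inner_lr * 2.0 * np.mean(x_s ** 2)
        # Outer gradient: query loss evaluated at the adapted parameter.
        meta_grad += grad(w_adapted, x_q, y_q) * d_adapted
    return w - outer_lr * meta_grad / len(tasks)

def sample_task(rng, k_shot=5):
    """Each task is a line y = slope * x with a slope drawn from [1, 3]."""
    slope = rng.uniform(1.0, 3.0)
    x = rng.uniform(-1.0, 1.0, size=2 * k_shot)
    y = slope * x
    return x[:k_shot], y[:k_shot], x[k_shot:], y[k_shot:]

rng = np.random.default_rng(0)
w = 0.0
for _ in range(500):
    batch = [sample_task(rng) for _ in range(8)]
    w = maml_step(w, batch, inner_lr=0.1, outer_lr=0.05)
print(round(w, 2))  # should land near 2, the mean task slope
```

In this toy setting the learned initialization settles near the average slope, which is the starting point from which a single gradient step reaches any individual task most cheaply. For real networks the hand-written derivative is replaced by automatic differentiation, or dropped entirely in first-order approximations.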

Relation Networks (2018)

Relation networks use an architecture specialized for comparing pairs of images. Instead of relying on a fixed distance such as Euclidean or cosine, they learn a deep, nonlinear similarity function that scores the relation between query and support examples. The original paper reported results competitive with the state of the art at the time.

TapNet (2019)

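TapNet (Task-Adaptive Projection Network) learns a set of per-class reference vectors alongside the embedding network. For each episode it constructs a projection into a task-specific subspace in which the embedded support examples line up with their class references, and queries are classified by distance in that projected space. The authors reported strong results on the miniImageNet and tieredImageNet datasets.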

FEAT (2020)

FEAT (Few-shot Embedding Adaptation with Transformer) treats few-shot learning as an embedding adaptation problem. Rather than using one fixed, task-agnostic embedding for every task, it applies a set-to-set function, implemented with a transformer, to the support set so that the embeddings become task-specific and more discriminative for the classes at hand.

Transformer-based Models (2020 onward)

Powerful transformer-based models like BERT have shown strong performance on few-shot NLP tasks. Fine-tuning pretrained transformers on just a few examples adapts them to downstream tasks, capturing rich linguistic patterns from pretraining.

While major advances have been made recently, few-shot learning remains an open and active area of research. Performance still significantly lags behind conventional deep learning. There is ample room for developing more human-like rapid learning algorithms.

Implementing Few-Shot Learning in Python

Here are some useful open-source libraries and repositories for implementing few-shot learning systems in Python:

- Pytorch-Meta (Torchmeta): A PyTorch library for meta-learning and few-shot learning, distributed as the torchmeta package, with implementations of MAML, Prototypical Networks, Relation Nets, and more approaches.

- Learn2Learn: Another PyTorch library for meta-learning and few-shot learning, featuring ready-made benchmark tasks.

- Tensorflow-Few-Shot-Learning: TensorFlow 2 implementation of few-shot learning models like Matching and Prototypical Networks.

- Fewshot-Siamese-Networks: Framework for one-shot image recognition using siamese networks with contrastive losses.

- FSCE: Few-shot object detection via contrastive proposal encoding, which adds a contrastive loss over region proposals to a fine-tuned detector.

These open source libraries provide modular components, reusable training pipelines, pretrained models, and options to customize architecture and loss functions for few-shot tasks.

Challenges and Open Problems

While few-shot learning has shown impressive progress in replicating human-like rapid learning, there remain considerable scientific obstacles to reaching human-level performance:

- Learning new concepts from just a single example per class is still extremely difficult for computer vision models, yet humans can learn from a single image.

- NLP models still require far more examples and context compared to humans to acquire new word meanings, translate new languages, or grasp new tasks.

- Class-incremental few-shot learning poses algorithmic challenges in avoiding catastrophic forgetting of original training classes when adapting to novel classes.

- Learning abstract concepts from sparse examples, leveraging high-level background knowledge, and making creative inferences remain limited in current AI systems.

Although deep learning has surpassed human performance on tasks with massive datasets, few-shot learning highlights how much progress remains to match human-level adaptability and generalization. However, advances in few-shot learning bring us steps closer to more flexible, general, and reusable artificial intelligence.

Outlook

According to a 2021 survey of few-shot learning researchers, the top ways the field could progress are:

- New model architectures specialized for few-shot tasks

- More sophisticated meta-training algorithms

- Better benchmarks and evaluation protocols

- Insights from cognitive science

- Combining multiple methods into a unified framework

The consensus is that hybrid approaches that integrate strategies like metric learning, optimization-based meta-learning, data augmentation, transfer learning, and specialized model architectures hold the most promise going forward.

Conclusion

In this comprehensive guide, we explored the rapidly evolving field of few-shot learning – a paradigm for training AI models to learn new concepts from scarce data similar to human abilities.

We covered the motivations behind few-shot learning, major applications, how few-shot models work, state-of-the-art algorithms, implementations, challenges, and the outlook for the field. While significant research problems remain, few-shot learning represents an exciting step towards more efficient, flexible, and ubiquitous artificial intelligence systems.