Machine learning (ML) models increasingly rely on sensitive personal data for training. As these models are deployed for facial recognition, personalized recommendations, and other applications, valid concerns have emerged around individual privacy. Studies have shown deep neural networks memorize specific examples from raw training data. If those examples include personal information, it exposes individuals‘ privacy by enabling attackers to steal private data through techniques like membership inference attacks, reconstruction attacks, and property inference attacks.

Differential privacy is a rigorous technique that introduces calibrated noise into ML models to prevent such privacy violations while maintaining high accuracy. As regulations tighten and users demand more accountability around AI ethics, differential privacy will continue growing in importance as a way to build secure and ethical ML systems.

The Urgent Need for Differential Privacy

ML models work by finding patterns in training datasets. However, research has demonstrated that deep neural networks also memorize particular training examples. An analysis across computer vision models like AlexNet and ResNet found that simple image classifiers memorize up to 10-30% of unique examples from training sets. More complex models and larger datasets lead to even higher memorization rates.

This observation combined with the prevalence of personal data used to train models — faces, voices, location information, browsing history — creates unacceptable privacy risks. Multiple studies have now shown how attackers can exploit model memorization to steal private data through techniques like:

-

Membership inference attacks – determine if someone‘s data was part of the training set with over 90% accuracy in some cases.

-

Reconstruction attacks – reconstruct images, text, and other private data from training sets by analyzing model behavior.

-

Property inference attacks – deduce properties about training data itself, like the relative number of examples belonging to each class.

A striking example comes from a facial recognition study where researchers managed to accurately recreate images of individuals‘ faces using only their name and API access to the model.

In this reconstruction attack, the recovered facial image (left) closely matched the individual‘s original image from the training set (right). Source: CMU

These types of attacks pose fundamental risks to individual privacy and demonstrate the urgent need for solutions like differential privacy in machine learning pipelines.

How Differential Privacy Protects ML Models

Differential privacy works by intentionally adding noise to the data or model to obscure any individual example from the training set. This makes ML models capable of learning generalizable patterns from the data while provably protecting privacy.

There are three primary techniques to implement differential privacy:

Input Perturbation involves adding calibrated random noise to individual data points before model training. This ensures the influence of any single example gets masked.

Output Perturbation adds controlled noise to the model outputs or predictions before release. This obscures contributions from specific training examples.

Algorithm Perturbation injects noise during model training to the parameters like weights and biases. This prevents memorization of examples in the learned parameters.

The key to differential privacy is calibrating the right amount of noise to maximize accuracy and utility while upholding a rigorous privacy guarantee. The formal privacy definition states that adding or removing any single example from the training set produces statistically indistinguishable model outputs. The epsilon parameter tracks the privacy budget — lower epsilon means more privacy and noise.

# Example of differentially private SGD optimizer with clipped gradients

optimizer = dp_optimizer.DPGradientDescentGaussianOptimizer(

l2_norm_clip=1.0,

noise_multiplier=0.5,

num_microbatches=500,

learning_rate=0.15,

epsilon=2.0)This injected noise crucially prevents attackers from reverse engineering the training data while allowing the model to maintain high utility. Under differential privacy, memorization of any single training example is provably bounded even for adversarially chosen examples.

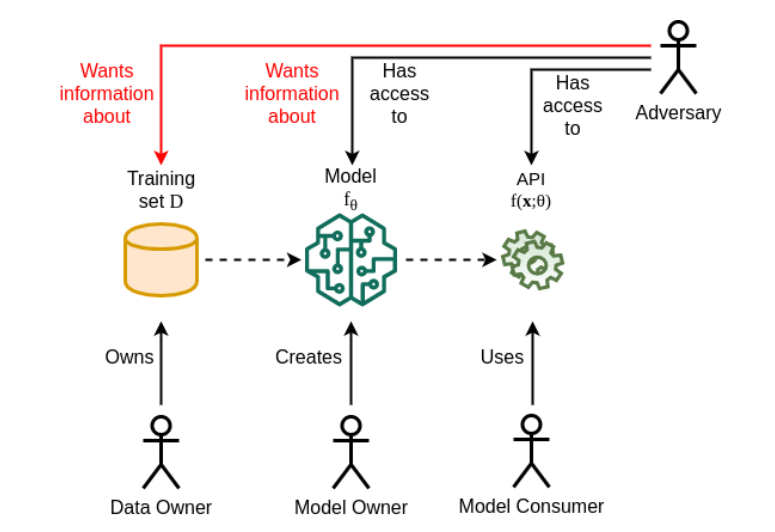

With differential privacy, noise overwhelms contributions from any individual example to make privacy attacks infeasible. Source: A Survey of Privacy Attacks in Machine Learning

In 2023 and beyond, differential privacy serves as an essential component of responsible and ethical machine learning by preventing misuse of sensitive training data. Leading technology companies like Google, Apple, Microsoft, and Uber already employ differential privacy techniques to address valid privacy concerns from regulators and consumers.

Critical Role in Federated Learning

Federated learning is a distributed approach for training ML models on decentralized data from many users or devices while keeping data localized. This enhances privacy by avoiding direct data collection. However, studies have shown federated learning systems can still be vulnerable to certain privacy attacks.

For example, an attacker may be able to identify properties about an individual user‘s local dataset based on patterns in that user‘s model updates. If a user‘s local update diverges significantly from the global model, it likely indicates unique properties about that user‘s local training examples. While aggregation across users masks this signal, a user who contributes very unique local data may get partially de-anonymized.

Differential privacy can help by adding noise to the local updates before they get aggregated into the global model. This ensures privacy even in the federated setting. Furthermore, combining federated learning and differential privacy takes advantage of both techniques — keeping data decentralized while provably bounding privacy leakage.

Researchers have developed techniques like applying differential privacy at both the user-level and server-level to optimize the privacy-utility tradeoff in federated learning. These advances will enable secure real-world applications like predictive healthcare analytics across hospitals and personalized next-word prediction across millions of private mobile devices.

Growing Adoption to Meet Privacy Demands

Many leading technology providers have started integrating differential privacy techniques into their machine learning pipelines and offerings. Google enabled differential privacy in its Chrome browser back in 2016 to power analytics while protecting users‘ privacy. Apple has deployed on-device differential privacy since iOS 14 to gather usage statistics that inform features like QuickType and emoji suggestions while keeping personal information encrypted.

Facebook also developed an end-to-end differentially private analytics pipeline called PrivateLift that runs directly on user devices. Microsoft‘s Census tool leverages differential privacy for secure data analysis. And Uber uses it to analyze rider behavior without compromising privacy.

Differential privacy can be applied at various stages of the machine learning pipeline. Source: Apple

In 2023, we will see differential privacy become a standard part of ML pipelines at more technology companies and health organizations to address valid privacy concerns from consumers and regulators. Conferences like NeurIPS now regularly host workshops dedicated to differential privacy research and applications.

Frameworks like TensorFlow Privacy, PySyft, Opacus, and Google‘s Differential Privacy Library make implementing differential privacy more seamless. The active research happening in this space will continue enhancing the utility-privacy tradeoff. For example, techniques like Adaptive Differential Privacy automatically tune noise levels. And continual release of curated datasets like Differential Privacy Synthetic Data provides safe, private data for model training and testing.

Moving forward, differential privacy serves as a rigorous technique for addressing the privacy risks inherent in modern machine learning — enabling data scientists and organizations to develop secure, ethical models that provably protect individuals‘ privacy.