Artificial intelligence (AI) is transforming our society in profound ways, from healthcare to transportation to how we work. With this rapid mainstream adoption, misconceptions abound about what AI really is and what it can and can't do today. An accurate picture helps avoid both inflated expectations and unreasonable fears.

As an AI specialist with over 15 years in data science and machine learning, I find that myths persist around AI capabilities, objectivity, job impacts, limitations, and potential risks. Let's demystify AI by exploring the top five misconceptions:

Myth 1: AI Will Achieve Human-Level Intelligence and Take Over the World

Perhaps no myth has captured the public imagination more than the scare scenario of AI surpassing human intelligence and capability. Movies like Terminator embed fears of AI turning against people in doomsday scenarios.

The reality is that current AI exhibits narrow intelligence – it can excel at specific tasks like playing chess or Go, but lacks generalized reasoning, common sense, and adaptability. While great strides have been made in domains like computer vision and natural language processing, today's AI still struggles with many simple things toddlers can do.

According to AI experts like Andrew Ng, AI is akin to electricity: a powerful general-purpose tool that greatly enhances our lives, yet is far from human intelligence. AI can outperform people at individual, well-defined tasks, but matching human versatility across even a handful of domains remains distant. The risks from advanced AI, known as artificial general intelligence (AGI), remain hypothetical.

The typical AI systems powering business applications today are designed to enhance and assist human capabilities, not replace people entirely. AI excels at crunching numbers, finding patterns and completing rote tasks. It does not seek to take over the world like a supervillain.

AI today has narrow applications, not human-level general intelligence. (Source: marketingscoop.com)

Despite the hype, true artificial general intelligence on par with people remains far off. With responsible governance and ethics practices, we can harness AI to enhance our lives tremendously without uncontrolled risks.

Myth 2: AI Makes Perfectly Objective and Fair Decisions

Another common myth is the assumption that algorithmic decisions must be inherently objective and fair, free from human biases. With AI handling high-stakes tasks like credit lending, hiring, healthcare and criminal justice, this myth can be very dangerous if taken at face value.

The reality is AI systems reflect the biases and limitations of the data used to train them and the people who build them. Unexamined historical training data often bakes in past prejudices and discrimination. For example:

- Word embedding models can inherit harmful gender and racial biases if trained on text corpora containing stereotyped language.
- Facial recognition algorithms have higher error rates for women and darker-skinned individuals if trained on unrepresentative datasets.
- Hiring algorithms can discriminate against women if historical hiring data feeds the model biased associations between gender and competency.

In my consulting experience, clients are often surprised that their AI models pick up biases they were not aware of in their data. It takes proactive testing and mitigation practices to address fairness issues.

Here are three types of biases that can manifest in AI systems:

Historical Bias: Models that learn relationships from historical data may inherit and perpetuate past biases like gender or racial discrimination.

Representation Bias: Models trained on data that underrepresents certain groups tend to perform worse on those groups.

Measurement Bias: Poorly chosen proxies, labels and performance metrics, sometimes reinforced by feedback loops, can skew model behavior toward biased outcomes.

To build trustworthy AI, we must ensure data diversity and inclusiveness, extensively test for fairness, and implement responsible model governance. It is unwise to assume AI will make perfect decisions without oversight. Achieving ethical AI remains an ongoing challenge.
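To make "testing for fairness" concrete, here is a minimal sketch of one common check: comparing how often a model selects members of each group and how accurate it is for each. The group labels, the toy predictions, and the function names `group_metrics` and `disparate_impact_ratio` are all hypothetical, made up for illustration. The 0.8 threshold is the widely cited "four-fifths" rule of thumb, not a universal standard.

```python
from collections import defaultdict

def group_metrics(groups, y_true, y_pred):
    """Return the selection rate and accuracy for each group."""
    counts = defaultdict(lambda: {"n": 0, "selected": 0, "correct": 0})
    for group, truth, pred in zip(groups, y_true, y_pred):
        c = counts[group]
        c["n"] += 1
        c["selected"] += int(pred == 1)   # model gave the favorable outcome
        c["correct"] += int(pred == truth)
    return {
        group: {
            "selection_rate": c["selected"] / c["n"],
            "accuracy": c["correct"] / c["n"],
        }
        for group, c in counts.items()
    }

def disparate_impact_ratio(metrics):
    """Lowest group selection rate divided by the highest."""
    rates = [m["selection_rate"] for m in metrics.values()]
    highest = max(rates)
    return min(rates) / highest if highest > 0 else 1.0

# Hypothetical toy data: 1 = favorable decision (e.g. "advance to interview").
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
y_true = [1, 0, 1, 1, 1, 0, 1, 1]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]

metrics = group_metrics(groups, y_true, y_pred)
ratio = disparate_impact_ratio(metrics)

for group, m in sorted(metrics.items()):
    print(group, m)
print("disparate impact ratio:", round(ratio, 2))
if ratio < 0.8:  # assumed threshold: the "four-fifths" rule of thumb
    print("Selection rates differ enough to warrant a closer look.")
```

A low ratio does not prove discrimination on its own, and a high one does not rule it out. It is a flag that tells you where to investigate the data and the model more carefully.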

Myth 3: AI is a Black Box, Impossible for Humans to Understand

Another common myth paints AI systems, especially deep neural networks, as opaque black boxes impossible for even their creators to understand. The fear is that we are unleashing uncontrollable technologies.

In reality, while deep learning models can have billions of parameters, which makes interpretation challenging, AI is built on mathematical models that data scientists can inspect, analyze and refine. There are two problems hampering transparency:

Model Complexity: State-of-the-art deep learning models have grown massively in size and complexity. For example, OpenAI's GPT-3 model has 175 billion parameters.

Lack of Investment: Most resources are invested in building models, with fewer devoted to explaining them. Interpretability tools also tend to lag behind the most accurate cutting-edge models.

However, various techniques already exist to improve AI transparency and explainability:

- Visualization tools can illustrate how neural networks operate and the importance of different components.
- Sensitivity analysis observes how tweaking inputs changes outputs to infer relationships.
- Local approximation methods explain individual predictions by approximating the model locally.
- Simpler models like decision trees are sometimes used for greater explainability.
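As an illustration of sensitivity analysis, the sketch below nudges each input of a model up and down and records how much the output moves. The `toy_model` function, its weights, and the feature names are hypothetical stand-ins for a trained model. The `sensitivity` helper only calls the model as a black box, so the same idea would apply to a model whose internals you cannot read.

```python
import math

def toy_model(features):
    """Hypothetical stand-in for a trained model: a small logistic scorer."""
    weights = {"income": 0.8, "debt": -1.2, "age": 0.05}  # made-up weights
    z = sum(weights[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))

def sensitivity(model, features, delta=0.01):
    """Estimate the output change per unit change in each input,
    using a central finite difference around the given input."""
    result = {}
    for name in features:
        up = dict(features)
        up[name] += delta
        down = dict(features)
        down[name] -= delta
        result[name] = (model(up) - model(down)) / (2 * delta)
    return result

# Hypothetical applicant with features already scaled to comparable ranges.
applicant = {"income": 1.0, "debt": 0.5, "age": 0.3}

scores = sensitivity(toy_model, applicant)
ranked = sorted(scores, key=lambda name: abs(scores[name]), reverse=True)

for name in ranked:
    print(f"{name}: {scores[name]:+.3f}")
```

The sign shows whether raising an input pushes the score up or down, and the magnitude shows how strongly. These values describe the model's behavior near this one input only, and comparing them across features is meaningful only when the features are on similar scales.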

Domain expertise is still critical to evaluate whether a model's logic is sound or merely correlational. Transparency should continue improving as explaining AI becomes as high a priority as building it. We should dismiss the myth that black box opacity makes trustworthy AI impossible.

Myth 4: AI Will Eliminate Millions of Human Jobs and Livelihoods

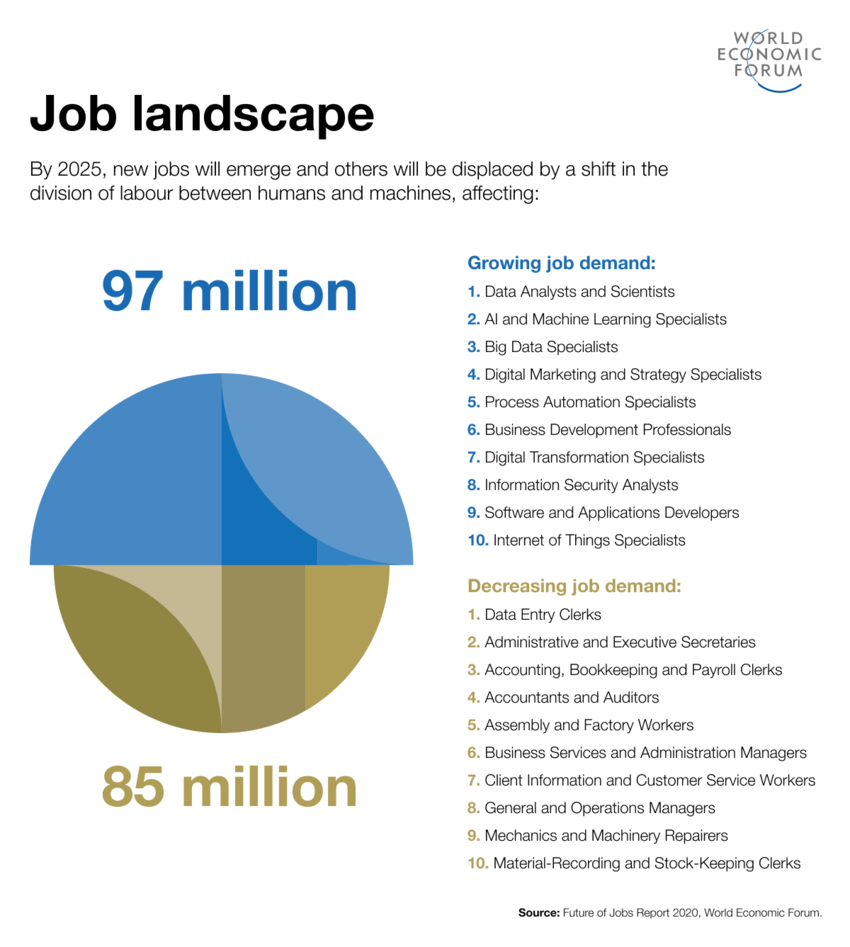

Among the most feared myths is the scenario of AI automation leading to mass workforce displacement and unemployment. Analysts have put out varying estimates of roles at risk, from hundreds of millions to nearly half of all jobs.

While it's true AI will transform many occupations, the net employment impacts are more nuanced according to studies by the OECD and World Economic Forum:

- Although some roles will decline, many more will be augmented and redefined with AI assistance rather than wholesale replacement.
- New professions will emerge to support AI development like data analysts, ML engineers, trainers, and transparency specialists.
- Most jobs involve a complex blend of tasks, many requiring adaptability and social intelligence where humans excel over machines.
- Economic growth from AI is expected to spur job creation and entirely new types of work we cannot envision today. Past technology revolutions have not led to long-term rises in unemployment.

Gartner predicted in 2017 that AI would eliminate 1.8 million jobs by 2020 while creating 2.3 million new ones, a net gain of roughly half a million. Rather than a tech apocalypse, we are likely to see an ongoing adaptation of the labor market, as happened with previous technological shifts.

However, displaced workers without retraining opportunities face a real risk of hardship. Investing in education, social safety nets, job transition support and inclusive growth policies can ensure the benefits are broadly shared.

AI will transform more jobs than it eliminates, creating new human+AI roles (Source: World Economic Forum)

Myth 5: AI Will Only Impact Lower-Skilled Jobs, Not Professionals

A common assumption is that AI automation primarily threatens repetitive, routine jobs often requiring lower skills and education levels. By extension, "skilled" roles like doctors, bankers, and lawyers are believed to be immune.

In reality, many specialized occupations stand to be profoundly transformed by AI capabilities. Rather than full automation, we'll see extensive augmentation, with professionals teaming up with AI systems:

- Radiologists are partnering with AI diagnostic assistants to improve detection of abnormalities from medical images.
- Lawyers apply "e-Discovery" tools to rapidly analyze thousands of legal documents for relevant details.
- Portfolio managers complement their financial insights with robo-advisor algorithms to enhance performance.
- Engineers simulate designs with physics-based AI models prior to physical prototyping.

As machine learning capabilities grow, virtually any information-intensive role is fertile ground for assistance and for disruption. As Stanford professor Curtis Langlotz aptly states, “AI won’t replace radiologists, but radiologists who use AI will replace radiologists who don’t.” This likely applies to most professions.

Rather than be displaced, professionals should proactively evaluate how AI technologies can augment their expertise and effectiveness for the benefit of their customers and organizations.

Key Requirements for Advancing AI Progress Responsibly

Getting an accurate picture of AI's current abilities, limitations and misconceptions empowers us to deploy these potent technologies thoughtfully and safely. While AI achievements have been impressive, responsible development remains critical.

Based on my industry experience, here are four key requirements for advancing AI progress responsibly in the years ahead:

- Transparency & Auditability: Making AI systems explainable and providing visibility into their decision-making processes builds understanding and trust.
- Testing & Measurement: Extensive testing across diverse real-world conditions, and measuring for unintended consequences like biases, helps flag issues early.
- Governance & Oversight: Institutionalizing processes for risk-based AI reviews, impact assessments and monitoring ensures accountability.
- Skills & Literacy: Broadening AI skills and literacy within organizations and the public fosters thoughtful adoption.

Meeting these responsibilities requires collaboration between companies deploying AI, governments setting policy, and research institutions furthering innovations. With ethical AI anchoring progress, we can minimize risks and harness the abundant opportunities.

The Future of AI is Collaborative

Dispelling the most stubborn myths about artificial intelligence helps calibrate expectations about its current abilities and limitations. While AI achievements are already remarkable, the technology requires responsible governance and collaboration between technologists, domain experts, and society to fulfill its potential.

Already, AI systems are enhancing productivity and living standards across industries. Yet key challenges remain, from avoiding baked-in biases to maintaining transparency and control to preparing society for workforce changes. Through partnership, we can steer AI's future trajectory responsibly.

Rather than AI running amok, the real near-term risk is irresponsible use that fails to weigh benefits and risks thoughtfully. By grounding development in ethics and human primacy, we can write the next amazing chapter in shared prosperity.